LossFunction

is an option for NetTrain that specifies how to compare actual and requested outputs from a neural net.

Details

- LossFunction specifies the quantity that is minimized during training.

- In NetTrain[net,data,…], possible settings for LossFunction include:

| Setting | Meaning |
| --- | --- |
| Automatic | automatically attach loss layers to all outputs, or use existing loss outputs (default) |
| "port" | interpret the given port as a loss |
| losslayer | attach a user-defined loss layer to the net's output |
| "port"->losslayer | compute loss from one output of a multi-output net |
| {lspec1,lspec2,…} | minimize several losses simultaneously |
| {…,lspeci->Scaled[r],…} | scale individual losses by a factor r |

- When specifying a single loss layer with LossFunction->losslayer, the net being trained must have exactly one output port.

- Layers that can be used as losslayer include:

| Loss layer | Meaning |
| --- | --- |
| Automatic | select a loss automatically (default) |
| MeanAbsoluteLossLayer[] | mean of distance between output and target |
| MeanSquaredLossLayer[] | mean of squared distance between output and target |
| CrossEntropyLossLayer[form] | distance between output and target probabilities |
| ContrastiveLossLayer[] | how well output is maximized or minimized conditioned on target |
| NetGraph[…], NetChain[…], ThreadingLayer[…], etc. | any network with an "Input" and optional "Target" port |

- When a loss layer is chosen automatically for a port, the loss layer to use is based on the layer within the net whose output is connected to the port, as follows:

| Layer connected to the port | Loss used |
| --- | --- |
| SoftmaxLayer[…] | use CrossEntropyLossLayer["Index"] |
| ElementwiseLayer[LogisticSigmoid] | use CrossEntropyLossLayer["Binary"] |
| NetPairEmbeddingOperator[…] | use ContrastiveLossLayer[] |
| other non-loss layers | use MeanSquaredLossLayer[] |
| loss layers | use unchanged |

- In general, the output of a loss port is a scalar value. When it is an array, the loss value is computed as the sum of its elements.

- A user-defined loss layer can be a network with several output ports. Then the sum of all the output port values will be used as the loss to minimize, and each port will be reported as a separate sub-loss.

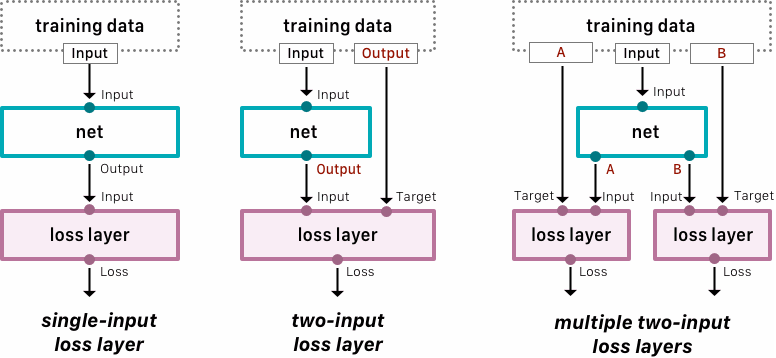

- If the attached loss layer has one input port ("Input"), it will be attached to the output of the net, and the keys of the training data should supply ports to feed the input of the net only (see the figure above, left side).

- If the attached loss layer has two input ports ("Input" and "Target"), the input will be attached to the output of the net and the target will be fed from the training data, using the name of the output port of the net (see the figure above, center). Typically, this name is "Output", so the usual case is training data of the form <|"Input"->{in1,in2,…},"Output"->{out1,out2,…}|>, which is often written as {in1,in2,…}->{out1,out2,…} or {in1->out1,in2->out2,…}.

- If multiple such layers are connected, there should be one port in the training data to feed the target of each layer (see the figure above, right side).
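The three equivalent training data forms for the two-port case can be sketched as follows. This is only an illustration: the data values and variable names are made up, and a shape-inferred LinearLayer[] stands in for the net.

```wolfram
(* Illustrative values only: four scalar inputs and their targets *)
inputs = {1, 2, 3, 4};
outputs = {1.9, 4.1, 6.0, 8.1};

(* Form 1: association keyed by the net's port names *)
assocData = <|"Input" -> inputs, "Output" -> outputs|>;

(* Form 2: a single rule from a list of inputs to a list of outputs *)
ruleData = inputs -> outputs;

(* Form 3: a list of input -> output rules *)
pairData = Thread[inputs -> outputs];

(* In each case the "Output" values feed the "Target" port of the loss layer *)
nets = Map[
   NetTrain[LinearLayer[], #, LossFunction -> MeanSquaredLossLayer[]] &,
   {assocData, ruleData, pairData}];
```

All three calls describe the same training set, so the choice between them is a matter of convenience.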


Examples

Basic Examples (4)

Train a simple net using MeanSquaredLossLayer, the default loss applied to an output when it is not produced by a SoftmaxLayer:
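A minimal sketch of such a training call, assuming a single LinearLayer[] and a handful of made-up scalar data points (neither is taken from the original example):

```wolfram
(* Illustrative data: scalar input -> output pairs, roughly y = 2 x *)
data = {1 -> 1.9, 2 -> 4.1, 3 -> 6.0, 4 -> 8.1};

(* No LossFunction is given, so the Automatic setting applies; the output
   comes from a LinearLayer, so MeanSquaredLossLayer[] is attached *)
trained = NetTrain[LinearLayer[], data];

(* Apply the trained net to a batch of scalar inputs *)
trained[{1.5, 2.5}]
```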

Evaluate the trained net on a set of inputs:

Specify a different loss layer to attach to the output of the network. First create a loss layer:

The loss layer takes an input and a target and produces a loss:

Use this loss layer during training:
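As a rough sketch of these steps, the following assumes MeanAbsoluteLossLayer[] as the alternative loss and reuses illustrative scalar data; both choices are assumptions made for this example:

```wolfram
(* Illustrative data: scalar input -> output pairs *)
data = {1 -> 1.9, 2 -> 4.1, 3 -> 6.0, 4 -> 8.1};

(* Loss layer with "Input" and "Target" ports; shapes are inferred during training *)
lossLayer = MeanAbsoluteLossLayer[];

(* Attach the loss layer to the single output port of the net *)
trained = NetTrain[LinearLayer[], data, LossFunction -> lossLayer];

(* Apply the trained net to a batch of scalar inputs *)
trained[{1.5, 2.5}]
```

Because the loss layer has both an "Input" and a "Target" port, the right-hand sides of the rules in data are used as targets.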

Evaluate the trained net on a set of inputs:

Create a net that takes a vector of length 2 and produces one of the class labels Less or Greater:

NetTrain will automatically use a CrossEntropyLossLayer object with the correct class encoder:
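One way such a classifier and its training might look is sketched below. The layer sizes and the training pairs are assumptions chosen for illustration.

```wolfram
(* Net mapping a length-2 vector to one of the labels Less or Greater *)
net = NetChain[{LinearLayer[2], SoftmaxLayer[]},
   "Input" -> 2,
   "Output" -> NetDecoder[{"Class", {Less, Greater}}]];

(* Illustrative data: label says how the first element compares to the second *)
data = {{1, 2} -> Less, {2, 1} -> Greater, {3, 5} -> Less,
   {5, 3} -> Greater, {0, 4} -> Less, {4, 0} -> Greater};

(* The output comes from a SoftmaxLayer, so the Automatic setting uses
   CrossEntropyLossLayer["Index"] with an encoder for the class labels *)
trained = NetTrain[net, data];

(* Classify a batch of new inputs *)
trained[{{2, 7}, {7, 2}}]
```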

Evaluate the trained net on a set of inputs:

Create an explicit loss layer that expects the targets to be in the form of probabilities for each class:

The training data should now consist of vectors of probabilities instead of symbolic classes:
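A sketch of the probability-target variant, under the same assumptions as a small two-class net with made-up data (the probability values here are illustrative only):

```wolfram
(* Same kind of two-class net as before *)
net = NetChain[{LinearLayer[2], SoftmaxLayer[]},
   "Input" -> 2,
   "Output" -> NetDecoder[{"Class", {Less, Greater}}]];

(* Loss layer whose "Target" port expects a probability vector per example *)
lossLayer = CrossEntropyLossLayer["Probabilities"];

(* Targets are {P(Less), P(Greater)} instead of symbolic labels *)
probData = {{1, 2} -> {0.9, 0.1}, {2, 1} -> {0.1, 0.9},
   {3, 5} -> {0.9, 0.1}, {5, 3} -> {0.1, 0.9}};

trained = NetTrain[net, probData, LossFunction -> lossLayer];

(* The trained net still decodes its output to a class label *)
trained[{{2, 7}, {7, 2}}]
```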

Evaluate the trained net on a set of inputs:

Start with an "evaluation net" to be trained:

Create a "loss net" that explicitly computes the loss of the evaluation net (here, the custom loss is equivalent to MeanSquaredLossLayer):

Train this net on some synthetic data, specifying that the output port named "Loss" should be interpreted as a loss:

Obtain the trained "evaluation" network using NetExtract:
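The whole workflow above (evaluation net, loss net, training on a named loss port, extraction) might be sketched as follows. The layer sizes, layer names "eval" and "loss", and synthetic data are assumptions. For simplicity this sketch wires in MeanSquaredLossLayer[] itself rather than a hand-built equivalent.

```wolfram
(* "Evaluation net": maps a length-2 vector to a length-1 vector *)
evalNet = NetChain[{LinearLayer[8], ElementwiseLayer[Tanh], LinearLayer[1]},
   "Input" -> 2];

(* "Loss net": wraps the evaluation net and exposes an output port named "Loss" *)
lossNet = NetGraph[
   <|"eval" -> evalNet, "loss" -> MeanSquaredLossLayer[]|>,
   {NetPort["Input"] -> "eval",
    "eval" -> NetPort[{"loss", "Input"}],
    NetPort["Target"] -> NetPort[{"loss", "Target"}],
    "loss" -> NetPort["Loss"]}];

(* Synthetic data: the target is the sum of the two input elements *)
inputs = RandomReal[{-1, 1}, {256, 2}];
targets = Map[{Total[#]} &, inputs];

(* Treat the existing "Loss" output as the quantity to minimize *)
trained = NetTrain[lossNet,
   <|"Input" -> inputs, "Target" -> targets|>,
   LossFunction -> "Loss"];

(* Pull the trained evaluation net back out of the graph *)
evalTrained = NetExtract[trained, "eval"];
evalTrained[{0.5, -0.25}]
```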

Part syntax can also be used:

Plot the net's output on the plane:

Create a training net that computes both an output and multiple explicit losses:

This network requires an input and a target in order to produce the output and losses:

Train using a specific output as loss, ignoring all other outputs:

Measure the loss on a single pair after training:

Specify multiple losses to be trained jointly:
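A hedged sketch of joint training on two loss ports, one of them rescaled with Scaled. The graph layout, the port names "Loss1" and "Loss2", the scale factor, and the data are all hypothetical choices for this illustration.

```wolfram
(* Training net with one ordinary output and two explicit loss outputs *)
net = NetGraph[
   <|"eval" -> LinearLayer[1],
     "mse" -> MeanSquaredLossLayer[],
     "mae" -> MeanAbsoluteLossLayer[]|>,
   {NetPort["Input"] -> "eval",
    "eval" -> NetPort[{"mse", "Input"}],
    "eval" -> NetPort[{"mae", "Input"}],
    NetPort["Target"] -> NetPort[{"mse", "Target"}],
    NetPort["Target"] -> NetPort[{"mae", "Target"}],
    "eval" -> NetPort["Output"],
    "mse" -> NetPort["Loss1"],
    "mae" -> NetPort["Loss2"]},
   "Input" -> 2];

(* Synthetic data: the target is the sum of the two input elements *)
inputs = RandomReal[{-1, 1}, {256, 2}];
targets = Map[{Total[#]} &, inputs];

(* Minimize Loss1 plus 0.5 times Loss2; the "Output" port is not used as a loss *)
trained = NetTrain[net,
   <|"Input" -> inputs, "Target" -> targets|>,
   LossFunction -> {"Loss1", "Loss2" -> Scaled[0.5]}];
```

Scaling one loss relative to the other changes how much each term contributes to the total that is minimized.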

Use NetTake to remove all but the desired output and input:

Text

Wolfram Research (2018), LossFunction, Wolfram Language function, https://reference.wolfram.com/language/ref/LossFunction.html (updated 2022).
