PitchRecognize[audio]

recognizes the main pitch in audio, returning it as a TimeSeries object.

PitchRecognize[audio,spec]

returns the main pitch processed according to the specified spec.

PitchRecognize[video,…]

recognizes the main pitch in the first audio track in video.

Details and Options

- The task performed by PitchRecognize is also known as pitch detection or pitch tracking.

- PitchRecognize assumes that the signal contains a single pitch at any given time.

- If a pitch is not detected at a specific time, a Missing[] value is returned for that time.

- The pitch specification spec can be any of the following:

-

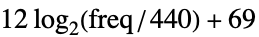

"Frequency" a frequency in hertz (default) "FrequencyMagnitude" magnitude of a frequency in hertz "QuantizedFrequency" a quantized frequency in hertz {"QuantizedFrequency",list} a frequency in hertz quantized to the values of list "MIDI"  , where freq is the frequency in hertz

, where freq is the frequency in hertz"QuantizedMIDI" rounded MIDI values "SoundNotePitch" pitch specification with 0 corresponding to the middle C (as in SoundNote) f arbitrary function f applied to recognized frequencies - The following options can be given:

-

AcceptanceThreshold Automatic minimum probability to consider acceptable Alignment Center alignment of the timestamps with partitions AllowedFrequencyRange Automatic min and max frequency MetaInformation None include additional metainformation Method Automatic method to be used MissingDataMethod None method to use for missing values PartitionGranularity Automatic audio partitioning specification PerformanceGoal "Speed" aspects of performance to try to optimize ResamplingMethod Automatic the method to use for resampling paths - Possible settings for Method include:

-

Automatic automatically chosen "CREPE" a neural network trained for pitch estimation "Speech" a vocoder-based speech fundamental frequency estimation "YIN" a YIN-based algorithm - Use Method->{"CREPE",TargetDevice->dev} to perform the neural net evaluation on device dev.

- PitchRecognize uses machine learning. Its methods, training sets and biases included therein may change and yield varied results in different versions of the Wolfram Language.

- PitchRecognize may download resources that will be stored in your local object store at $LocalBase, and can be listed using LocalObjects[] and removed using ResourceRemove.
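The pitch specifications listed above are plain arithmetic on each recognized frequency. The Python sketch below restates them for illustration only and does not show how PitchRecognize is implemented. The function names are hypothetical, and None stands in for Missing[]. Two details are assumptions: that "SoundNotePitch" is the unrounded MIDI value minus 60 (middle C), and that "QuantizedFrequency" snaps to the nearest equal-tempered MIDI pitch with A4 = 440 Hz.

```python
import math

A4_HZ = 440.0       # reference frequency of A4 in the "MIDI" formula
MIDDLE_C_MIDI = 60  # MIDI number of middle C, i.e. SoundNote pitch 0


def midi(freq):
    """'MIDI': 69 + 12*log2(freq/440). None stands in for Missing[]."""
    if freq is None:
        return None
    return 69 + 12 * math.log2(freq / A4_HZ)


def quantized_midi(freq):
    """'QuantizedMIDI': the MIDI value rounded to the nearest integer."""
    m = midi(freq)
    return None if m is None else round(m)


def sound_note_pitch(freq):
    """'SoundNotePitch' (assumed unrounded): semitones relative to middle C."""
    m = midi(freq)
    return None if m is None else m - MIDDLE_C_MIDI


def quantized_frequency(freq):
    """'QuantizedFrequency' (assumed): frequency of the nearest MIDI pitch."""
    q = quantized_midi(freq)
    return None if q is None else A4_HZ * 2 ** ((q - 69) / 12)


track = [440.0, 261.63, None, 112.0]  # made-up recognized frequencies in Hz
print([quantized_midi(f) for f in track])  # [69, 60, None, 45]
```

Python's round sends exact halves to the nearest even integer. How PitchRecognize breaks such ties is not stated here.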

Examples

Scope (5)

Recognize the pitch and return frequencies in Hz:

Express the result as a MIDI specification:

Recognize the quantized pitch:

Frequency in Hz quantized to the frequencies of the standard MIDI pitches:

Quantized SoundNote specification:

Quantized frequency using a specific list of values:
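As a rough illustration, the {"QuantizedFrequency", list} idea can be written in Python as snapping each frequency to the closest element of a list. The helper name and the list of frequencies are hypothetical. Measuring closeness as an absolute difference in hertz is an assumption; PitchRecognize may use a different distance.

```python
def quantize_to_list(freq, allowed):
    """Snap freq (Hz) to the closest value in `allowed`; None stands in for Missing[].

    Closeness is an absolute difference in hertz (an assumption).
    Ties go to the value that appears first in `allowed`.
    """
    if freq is None:
        return None
    return min(allowed, key=lambda v: abs(v - freq))


scale = [220.0, 261.63, 329.63, 392.0]  # hypothetical list of allowed frequencies
print([quantize_to_list(f, scale) for f in [300.0, 225.5, None]])  # [329.63, 220.0, None]
```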

Recognize modified pitch using an arbitrary function:

Define a MIDI specification in which A4 (the A above middle C) is tuned to 432 Hz instead of 440 Hz:
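In PitchRecognize, this kind of alternative tuning is expressed by passing an arbitrary function f as spec. The arithmetic such an f would perform is sketched in Python below. The name `midi_432` is hypothetical. The formula is the "MIDI" formula with the 440 Hz reference replaced by 432 Hz.

```python
import math


def midi_432(freq, a4=432.0):
    """MIDI-style value with A4 tuned to `a4` Hz instead of 440 Hz."""
    if freq is None:
        return None  # keep Missing[] frames missing
    return 69 + 12 * math.log2(freq / a4)


print(midi_432(432.0))  # 69.0
print(midi_432(440.0))  # about 69.32: 440 Hz is roughly a third of a semitone above A4 = 432 Hz
```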

Options (7)

AcceptanceThreshold (1)

Set a higher AcceptanceThreshold to keep only the most certain predictions:
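One way to picture AcceptanceThreshold, assuming each analysis frame carries a frequency and a confidence in [0, 1], is that frames whose confidence falls below the threshold become Missing[]. The Python sketch below follows that assumed model. The frame data is made up, and the use of `>=` rather than `>` is also an assumption.

```python
from typing import List, Optional, Tuple

# (time in s, frequency in Hz, confidence in [0, 1]); the values below are made up
Frame = Tuple[float, float, float]


def apply_acceptance_threshold(frames: List[Frame],
                               threshold: float) -> List[Tuple[float, Optional[float]]]:
    """Keep a frame's frequency only if its confidence reaches the threshold.

    Rejected frames get None, a stand-in for Missing[].
    """
    return [(t, f if p >= threshold else None) for t, f, p in frames]


frames = [(0.00, 220.1, 0.95), (0.01, 219.8, 0.62), (0.02, 440.5, 0.30)]
print(apply_acceptance_threshold(frames, 0.5))  # [(0.0, 220.1), (0.01, 219.8), (0.02, None)]
print(apply_acceptance_threshold(frames, 0.8))  # [(0.0, 220.1), (0.01, None), (0.02, None)]
```

Raising the threshold can only turn more frames into missing values; it never changes an accepted frequency.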

Method (4)

PerformanceGoal (1)

Use the "CREPE" method with PerformanceGoal set to "Speed" to use a smaller and faster network trained on the same dataset:

The computation is significantly faster, and the result remains accurate:

Applications (4)

Recognize the pitch of a human voice:

Compute the pitch TimeSeries:

A female voice typically has a higher pitch than a male voice:

Check the tuning of the cello used in an audio recording:

Compute the pitch TimeSeries:

Compute the mean of the pitch:

The expected frequency of the A played on the cello in this recording (A2) is 110 Hz. The mean pitch is close to that value, so the cello is well tuned.
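A tuning check like this one can be quantified in cents, where 100 cents make one equal-tempered semitone. The Python sketch below averages the detected frequencies, skipping missing frames, and reports the deviation from 110 Hz. The helper name and the input values are made up for illustration.

```python
import math
from statistics import mean


def tuning_offset_cents(track, target_hz=110.0):
    """Mean detected frequency and its deviation from target_hz in cents.

    None entries (stand-ins for Missing[]) are skipped; raises
    statistics.StatisticsError if no frequency was detected at all.
    """
    detected = [f for f in track if f is not None]
    avg = mean(detected)
    return avg, 1200 * math.log2(avg / target_hz)  # 100 cents = 1 equal-tempered semitone


avg, cents = tuning_offset_cents([109.0, None, 111.0, 110.0])
print(avg, cents)  # 110.0 0.0
```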

Transcribe a monophonic instrument:

Compute the pitch and express it as a SoundNote specification:

Delete all the Missing[] values:

Get the pitch and time boundaries of each played note:

Create a Sound object:

Create a sound using a different instrument:
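The transcription steps above (delete the Missing[] values, then find the pitch and boundaries of each note) can be sketched in Python on a made-up quantized pitch track. The helper name is hypothetical, and grouping by runs of equal consecutive pitches is an assumed approach, not necessarily what the example does. Each resulting tuple should map onto a SoundNote[pitch, {start, end}] note.

```python
from typing import List, Optional, Tuple


def segment_notes(times: List[float],
                  pitches: List[Optional[int]]) -> List[Tuple[int, float, float]]:
    """Turn a quantized pitch track into (pitch, start, end) notes.

    Step 1 drops Missing[] frames (None here); step 2 merges runs of equal
    consecutive pitches. `end` is the timestamp of the run's last frame.
    """
    kept = [(t, p) for t, p in zip(times, pitches) if p is not None]
    notes: List[List] = []
    for t, p in kept:
        if notes and notes[-1][0] == p:
            notes[-1][2] = t  # same pitch as the running note: extend it
        else:
            notes.append([p, t, t])  # pitch changed: start a new note
    return [(p, start, end) for p, start, end in notes]


times = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5]
pitches = [0, 0, None, 2, 2, 4]  # SoundNote pitches: 0 is middle C, 2 is D, 4 is E
print(segment_notes(times, pitches))  # [(0, 0.0, 0.1), (2, 0.3, 0.4), (4, 0.5, 0.5)]
```

Because missing frames are deleted before grouping, two repeated notes of the same pitch separated only by a gap merge into one note. Treating None as a note separator instead would keep them apart.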

Recognize pitch to reconstruct a signal. Start with a recording of a Bach fugue:

Track the pitch of the signal using the "CREPE" method and return quantized frequencies:

Properties & Relations (1)

The "CREPE" method uses the CREPE network from the Wolfram Neural Net Repository:

Possible Issues (1)

With the "YIN" method, the recognized pitch can be off by an octave (an octave error):

Adjust the value of the AllowedFrequencyRange option to mitigate the issue:
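To see why narrowing the frequency range helps, here is a simplified pure-Python version of the YIN algorithm: difference function, cumulative mean normalized difference and absolute threshold, with the parabolic-interpolation step omitted. It is not PitchRecognize's "YIN" implementation. The `fmin`/`fmax` lag restriction is only an assumed analogue of AllowedFrequencyRange, and all names and the test signal are made up. The signal has a weak 100 Hz fundamental and a strong 200 Hz harmonic. The half-period lag already passes the threshold, which gives an octave error. Excluding frequencies above 150 Hz recovers 100 Hz.

```python
import math
from typing import List, Optional


def yin_pitch(frame: List[float], sample_rate: float,
              fmin: float = 50.0, fmax: float = 1000.0,
              threshold: float = 0.1) -> Optional[float]:
    """Simplified YIN estimate of one frame's fundamental frequency in Hz.

    Only lags whose frequency lies in [fmin, fmax] are candidates, mimicking a
    restricted allowed frequency range. Returns None (a stand-in for Missing[])
    when no candidate lag dips below the threshold.
    """
    tau_min = max(1, math.ceil(sample_rate / fmax))
    tau_max = min(len(frame) // 2, math.floor(sample_rate / fmin))
    if tau_min > tau_max:
        return None
    window = len(frame) - tau_max  # same number of terms for every lag

    # Difference function d(tau) and cumulative mean normalized difference d'(tau).
    cmnd = [1.0] * (tau_max + 1)
    running = 0.0
    for tau in range(1, tau_max + 1):
        d = sum((frame[j] - frame[j + tau]) ** 2 for j in range(window))
        running += d
        cmnd[tau] = d * tau / running if running > 0 else 1.0

    # Absolute threshold: first candidate lag below it, then walk to the local minimum.
    for tau in range(tau_min, tau_max + 1):
        if cmnd[tau] < threshold:
            while tau < tau_max and cmnd[tau + 1] < cmnd[tau]:
                tau += 1
            return sample_rate / tau
    return None


# Made-up test signal: weak 100 Hz fundamental plus a strong 200 Hz second harmonic.
sr = 8000
frame = [0.1 * math.sin(2 * math.pi * 100 * n / sr) + math.sin(2 * math.pi * 200 * n / sr)
         for n in range(1024)]
print(yin_pitch(frame, sr))            # 200.0: one octave above the true 100 Hz fundamental
print(yin_pitch(frame, sr, fmax=150))  # 100.0 once frequencies above 150 Hz are excluded
```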

Wolfram Research (2019), PitchRecognize, Wolfram Language function, https://reference.wolfram.com/language/ref/PitchRecognize.html (updated 2024).