Parallelize[expr]

evaluates expr using automatic parallelization.

Parallelize

Details and Options

- Parallelize[expr] automatically distributes different parts of the evaluation of expr among different available kernels and processors.

- Parallelize[expr] normally gives the same result as evaluating expr, except for side effects during the computation.

- Parallelize has attribute HoldFirst, so that expressions are not evaluated before parallelization.

- The following options can be given:

| Option | Default | Description |
| --- | --- | --- |
| Method | Automatic | granularity of parallelization |
| DistributedContexts | $DistributedContexts | contexts used to distribute symbols to parallel computations |
| ProgressReporting | $ProgressReporting | whether to report the progress of the computation |

- The Method option specifies the parallelization method to use. Possible settings include:

| Setting | Description |
| --- | --- |
| "CoarsestGrained" | break the computation into as many pieces as there are available kernels |
| "FinestGrained" | break the computation into the smallest possible subunits |
| "EvaluationsPerKernel"->e | break the computation into at most e pieces per kernel |
| "ItemsPerEvaluation"->m | break the computation into evaluations of at most m subunits each |
| Automatic | compromise between overhead and load balancing |

- Method->"CoarsestGrained" is suitable for computations involving many subunits, all of which take the same amount of time. It minimizes overhead but does not provide any load balancing.

- Method->"FinestGrained" is suitable for computations involving few subunits whose evaluations take different amounts of time. It leads to higher overhead but maximizes load balancing.

- The DistributedContexts option specifies which symbols appearing in expr have their definitions automatically distributed to all available kernels before the computation.

- The default value is DistributedContexts:>$DistributedContexts with $DistributedContexts:=$Context, which distributes definitions of all symbols in the current context, but does not distribute definitions of symbols from packages.

- The ProgressReporting option specifies whether to report the progress of the parallel computation.

- The default value is ProgressReporting:>$ProgressReporting.

- Parallelize[f[…]] parallelizes these functions that operate on a list element by element: Apply, AssociationMap, Cases, Count, FreeQ, KeyMap, KeySelect, KeyValueMap, Map, MapApply, MapIndexed, MapThread, Comap, AssociationComap, ComapApply, MemberQ, Pick, Scan, Select and Through.

- Parallelize[iter] parallelizes the iterators Array, Do, Product, Sum, Table.

- Parallelize[list] evaluates the elements of list in parallel.

- Parallelize[f[…]] can parallelize listable and associative functions and inner and outer products. »

- Parallelize[cmd1;cmd2;…] wraps Parallelize around each cmdi and evaluates these in sequence. »

- Parallelize[s=expr] is converted to s=Parallelize[expr].

- Parallelize[expr] evaluates expr sequentially if expr is not one of the cases recognized by Parallelize.

Examples

Basic Examples (4)
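A minimal sketch of basic usage, assuming parallel kernels can be launched on the machine. The symbols `f`, `a`, `b`, `c` and `d` are undefined placeholders chosen for illustration:

```wolfram
(* Map an undefined function over a list in parallel *)
Parallelize[Map[f, {a, b, c, d}]]
(* {f[a], f[b], f[c], f[d]} *)

(* Generate a table in parallel *)
Parallelize[Table[Prime[i], {i, 10}]]
(* {2, 3, 5, 7, 11, 13, 17, 19, 23, 29} *)
```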

Scope (23)

Listable Functions (1)

Structure-Preserving Functions (8)

Many functional programming constructs that preserve list structure parallelize:

f@@@list is equivalent to MapApply[f,list]:
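As an illustration, both forms could be written as follows. `f` is an undefined placeholder, and MapApply is assumed to be available in the version in use:

```wolfram
(* Apply f at level 1 using the operator form *)
Parallelize[f @@@ {{1, 2}, {3, 4}, {5, 6}}]
(* {f[1, 2], f[3, 4], f[5, 6]} *)

(* The same computation written with MapApply *)
Parallelize[MapApply[f, {{1, 2}, {3, 4}, {5, 6}}]]
(* {f[1, 2], f[3, 4], f[5, 6]} *)
```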

The result need not have the same length as the input:
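A small sketch using Select, which keeps only some of the elements; the input range is an arbitrary choice for illustration:

```wolfram
(* 20 input elements, 8 results *)
Parallelize[Select[Range[20], PrimeQ]]
(* {2, 3, 5, 7, 11, 13, 17, 19} *)
```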

Without a function, Parallelize simply evaluates the elements in parallel:
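For instance, with three arbitrary element expressions chosen for illustration:

```wolfram
(* Each element of the list is evaluated on some available kernel *)
Parallelize[{1 + 2, Prime[10], 2^10}]
(* {3, 29, 1024} *)
```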

Reductions (4)

Count the number of primes up to one million:
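One way this count might be written, using a pattern test on each integer:

```wolfram
(* Count the integers from 1 to 10^6 that satisfy PrimeQ *)
Parallelize[Count[Range[10^6], _?PrimeQ]]
(* 78498 *)
```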

Check whether 93 occurs in a list of the first 100 primes:
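A possible formulation; since 93 = 3 × 31 is not prime, the test gives False:

```wolfram
(* Prime[Range[100]] is the list of the first 100 primes *)
Parallelize[MemberQ[Prime[Range[100]], 93]]
(* False *)
```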

Check whether a list is free of 5:

The argument does not have to be an explicit List:
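The two checks above might look like this, with an arbitrary sample list and an undefined placeholder head `f`:

```wolfram
(* Explicit list *)
Parallelize[FreeQ[{1, 2, 4, 9}, 5]]
(* True *)

(* Same elements under the head f instead of List *)
Parallelize[FreeQ[f[1, 2, 4, 9], 5]]
(* True *)
```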

Inner and Outer Products (2)

Iterators (3)
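A short sketch of two of the supported iterators, with arbitrary bounds chosen for illustration:

```wolfram
(* Sum of the first 1000 squares, computed in parallel *)
Parallelize[Sum[i^2, {i, 1000}]]
(* 333833500 *)

(* Table of squares *)
Parallelize[Table[i^2, {i, 5}]]
(* {1, 4, 9, 16, 25} *)
```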

Associative Functions (1)

Functions with the attribute Flat automatically parallelize:
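As a sketch, Max carries the Flat attribute, so a call such as the one below is a candidate for this kind of parallelization. The arguments are arbitrary, and how they are split across kernels is not specified here:

```wolfram
(* Confirm that Max is Flat *)
MemberQ[Attributes[Max], Flat]
(* True *)

(* The result is the same as for the sequential evaluation *)
Parallelize[Max[1, 5, 3, 9, 2, 8]]
(* 9 *)
```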

Functions for Associations (4)

Generalizations & Extensions (4)

Options (13)

DistributedContexts (5)

By default, definitions in the current context are distributed automatically:
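For illustration, assume a helper named `square` (a hypothetical name) is defined interactively in the current context:

```wolfram
(* Hypothetical helper defined in the current context *)
square[x_] := x^2

(* The definition is sent to the parallel kernels automatically *)
Parallelize[Map[square, Range[5]]]
(* {1, 4, 9, 16, 25} *)
```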

Do not distribute any definitions of functions:
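A sketch with a hypothetical helper `addOne`, assuming it has not been distributed earlier in the session. The final result can still look correct, because expressions returned unevaluated from the subkernels are evaluated on the master kernel:

```wolfram
(* Hypothetical helper, known only to the master kernel *)
addOne[x_] := x + 1

(* No definitions are distributed for this computation *)
Parallelize[Map[addOne, Range[4]], DistributedContexts -> None]
(* {2, 3, 4, 5} *)
```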

Distribute definitions for all symbols in all contexts appearing in a parallel computation:

Distribute only definitions in the given contexts:

Restore the value of the DistributedContexts option to its default:
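Given the default stated in the Details section, restoring it could be done as follows:

```wolfram
(* Reset the option to its documented default, using a delayed rule *)
SetOptions[Parallelize, DistributedContexts :> $DistributedContexts];

(* Inspect the current setting *)
Options[Parallelize, DistributedContexts]
(* {DistributedContexts :> $DistributedContexts} *)
```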

Method (6)

Break the computation into the smallest possible subunits:
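One way to observe the effect is to record which kernel evaluates each item. The table length of 12 is arbitrary, and the output depends on the kernels available:

```wolfram
(* Each item is scheduled as its own evaluation *)
Parallelize[Table[$KernelID, {i, 12}], Method -> "FinestGrained"]
```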

Break the computation into as many pieces as there are available kernels:
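A sketch that records the evaluating kernel for each item; with this setting, consecutive items would be expected to share a kernel. The table length is arbitrary and the output depends on the kernels available:

```wolfram
(* One batch of consecutive items per available kernel *)
Parallelize[Table[$KernelID, {i, 12}], Method -> "CoarsestGrained"]
```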

Break the computation into at most 2 evaluations per kernel for the entire job:
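The setting is written as a nested rule. A sketch with an arbitrary table length, whose output depends on the kernels available:

```wolfram
(* At most 2 evaluations are scheduled per kernel *)
Parallelize[Table[$KernelID, {i, 12}], Method -> "EvaluationsPerKernel" -> 2]
```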

Break the computation into evaluations of at most 5 elements each:
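A sketch with an arbitrary table length of 12, which would be split into batches of no more than 5 items; the output depends on the kernels available:

```wolfram
(* Each evaluation handles at most 5 items *)
Parallelize[Table[$KernelID, {i, 12}], Method -> "ItemsPerEvaluation" -> 5]
```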

The default option setting balances evaluation size and number of evaluations:

Calculations with vastly differing runtimes should be parallelized as finely as possible:

A large number of simple calculations should be distributed into as few batches as possible:

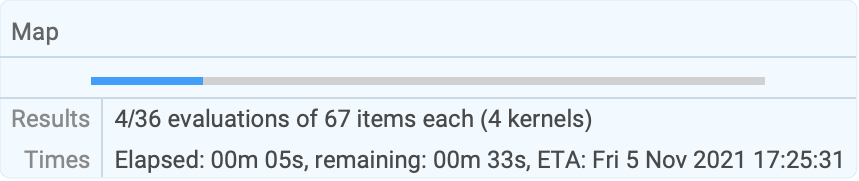

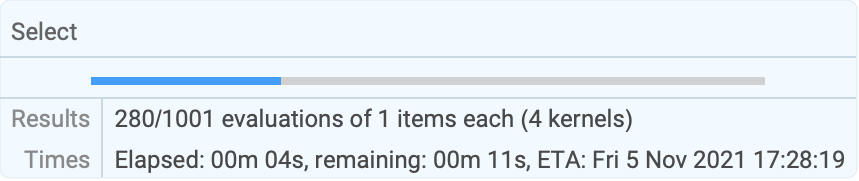

ProgressReporting (2)

Do not show a temporary progress report:
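A minimal sketch; the short pause only stands in for a slower computation:

```wolfram
(* Suppress the progress display for this computation *)
Parallelize[Table[Pause[0.1]; i, {i, 10}], ProgressReporting -> False]
(* {1, 2, 3, 4, 5, 6, 7, 8, 9, 10} *)
```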

Use Method->"FinestGrained" for the most accurate progress report:

Applications (4)

Properties & Relations (7)

For data parallel functions, Parallelize is implemented in terms of ParallelCombine:
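As an illustration of the relationship, the following two inputs give the same result. `f` is an undefined placeholder, and the exact internal call made by Parallelize is not shown here:

```wolfram
(* Automatic parallelization of Map *)
Parallelize[Map[f, Range[8]]]
(* {f[1], f[2], f[3], f[4], f[5], f[6], f[7], f[8]} *)

(* Explicit version: map over pieces of the list, then join the partial results *)
ParallelCombine[Map[f, #] &, Range[8]]
(* {f[1], f[2], f[3], f[4], f[5], f[6], f[7], f[8]} *)
```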

Parallel speedup can be measured with a calculation that takes a known amount of time:

Define a number of tasks with known runtimes:

The time for a sequential execution is the sum of the individual times:

Measure the speedup for parallel execution:
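The three steps above can be sketched as follows. The task durations in `times` are hypothetical values, and Pause is used as a stand-in for real work; measured timings depend on the number of kernels:

```wolfram
(* Hypothetical task runtimes in seconds *)
times = {0.5, 0.1, 0.1, 0.4, 0.2, 0.3};

(* Expected sequential time: the sum of the individual times *)
Total[times]
(* 1.6 *)

(* Measured parallel wall-clock time *)
parallelTime = First[AbsoluteTiming[Parallelize[Map[Pause, times]];]]

(* Speedup relative to the sequential estimate *)
Total[times]/parallelTime
```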

Finest-grained scheduling gives better load balancing and higher speedup:

Scheduling large tasks first gives even better results:

Form the arithmetic expression 1⊗2⊗3⊗4⊗5⊗6⊗7⊗8⊗9 for ⊗ chosen from +, –, *, /:

Each list of arithmetic operations gives a simple calculation:

Find all sequences of arithmetic operations that give 0:

Display the corresponding expressions:

Functions defined interactively are automatically distributed to all kernels when needed:

Distribute definitions manually and disable automatic distribution:
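A sketch with a hypothetical helper `cube`:

```wolfram
(* Hypothetical helper *)
cube[x_] := x^3

(* Send the definition to all parallel kernels explicitly *)
DistributeDefinitions[cube];

(* Turn off automatic distribution for this computation *)
Parallelize[Map[cube, Range[4]], DistributedContexts -> None]
(* {1, 8, 27, 64} *)
```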

For functions from a package, use ParallelNeeds rather than DistributeDefinitions:

Set up a random number generator that is suitable for parallel use and initialize each kernel:

Possible Issues (8)

Expressions that cannot be parallelized are evaluated normally:
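For example, a symbolic integral is not one of the recognized cases, so it would be evaluated sequentially. The integrand is an arbitrary choice, `x` is assumed to have no value, and a message about the fallback may be printed:

```wolfram
(* Not a recognized parallel construct; evaluated on the master kernel *)
Parallelize[Integrate[1/(x - 1), x]]
(* Log[-1 + x] *)
```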

Side effects cannot be used in the function mapped in parallel:

Use a shared variable to support side effects:
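A minimal sketch using SetSharedVariable; the variable name `results` is hypothetical. The order in which the kernels append is not fixed, so the list is sorted before inspection:

```wolfram
(* Variable owned by the master kernel *)
results = {};

(* Make all kernels read and write the master's copy *)
SetSharedVariable[results];

(* Each subkernel appends to the shared list *)
Parallelize[Scan[AppendTo[results, #^2] &, Range[5]]];

(* Sort to remove the dependence on scheduling order *)
Sort[results]
```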

If no subkernels are available, the result is computed on the master kernel:

If a function used is not distributed first, the result may still appear to be correct:

Only if the function is distributed is the result actually calculated on the available kernels:

Definitions of functions in the current context are distributed automatically:

Definitions from contexts other than the default context are not distributed automatically:

Use DistributeDefinitions to distribute such definitions:

Alternatively, set the DistributedContexts option to include all contexts:

Explicitly distribute the definition of a function:

The modified definition is automatically distributed:

Suppress the automatic distribution of definitions:

Symbols defined only on the subkernels are not distributed automatically:

The value of $DistributedContexts is not used in Parallelize:

Set the value of the DistributedContexts option of Parallelize:

Related Workflows

- Run a Computation in Parallel

History

Introduced in 2008 (7.0) | Updated in 2010 (8.0) ▪ 2021 (13.0)

Text

Wolfram Research (2008), Parallelize, Wolfram Language function, https://reference.wolfram.com/language/ref/Parallelize.html (updated 2021).
