"KernelDensityEstimation" (Machine Learning Method)

- Method for LearnDistribution.

- Models probability density with a mixture of simple distributions.

Details & Suboptions

- "KernelDensityEstimation" is a nonparametric method that models the probability density of a numeric space with a mixture of simple distributions (called kernels) centered around each training example, as in KernelMixtureDistribution.

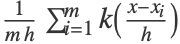

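- The probability density function for a vector $x$ is given by

$$f(x) = \frac{1}{m} \sum_{i=1}^{m} \frac{1}{h^{n}}\, K\!\left(\frac{x - x_i}{h}\right)$$

for a kernel function $K$, kernel size $h$, and a number of training examples $m$, where $x_i$ is the $i$th training example and $n$ is the dimension of the data.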

- The following options can be given:

| Option | Default | Description |
|---|---|---|
| Method | "Fixed" | kernel size method |
| "KernelSize" | Automatic | size of the kernels when Method -> "Fixed" |
| "KernelType" | "Gaussian" | type of kernel used |
| "NeighborsNumber" | Automatic | kernel size expressed as a number of neighbors |

- Possible settings for "KernelType" include:

| Setting | Description |
|---|---|
| "Gaussian" | each kernel is a Gaussian distribution |
| "Ball" | each kernel is a uniform distribution on a ball |

- Possible settings for Method include:

-

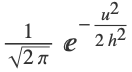

"Adaptive" kernel sizes can differ from each other "Fixed" all kernels have the same size - When "KernelType""Gaussian", each kernel is a spherical Gaussian (product of independent normal distributions

), and "KernelSize" h refers to the standard deviation of the normal distribution.

), and "KernelSize" h refers to the standard deviation of the normal distribution. - When "KernelType""Ball", each kernel is a uniform distribution inside a sphere, and "KernelSize" refers to the radius of the sphere.

- The value of "NeighborsNumber" -> k is converted into kernel size(s) so that a kernel centered on a training example typically "contains" k other training examples. If "KernelType" -> "Ball", "contains" refers to examples that are inside the ball. If "KernelType" -> "Gaussian", "contains" refers to examples that are inside a ball of radius $h\sqrt{n}$, where n is the dimension of the data.

- When Method -> "Fixed" and "NeighborsNumber" -> k, a single kernel size is found such that training examples contain, on average, k other examples.

- When Method -> "Adaptive" and "NeighborsNumber" -> k, each training example adapts its kernel size so that it contains about k other examples.

- Because of preprocessing, the "NeighborsNumber" option is typically a more convenient way to control kernel sizes than "KernelSize". When Method -> "Fixed", the value of "KernelSize" supersedes the value of "NeighborsNumber".

- Information[LearnedDistribution[…],"MethodOption"] can be used to extract the values of options chosen by the automation system.

- LearnDistribution[…, FeatureExtractor -> "Minimal"] can be used to remove most preprocessing and directly access the method.
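The density formula and the kernel-size notes above can be sketched in plain Python. This is not the LearnDistribution implementation. It works directly on raw numeric vectors with no preprocessing, and the helper names (`gaussian_kernel`, `ball_kernel`, `kde_pdf`, `fixed_size`, `adaptive_sizes`) are hypothetical. The two sizing functions are one plausible reading of the "NeighborsNumber" description. They assume that a Gaussian kernel's "containing" ball has radius $h\sqrt{n}$, which matches the fact that a spherical Gaussian with standard deviation h in n dimensions has expected squared distance $nh^2$ from its center. They also assume that all training examples are distinct, because duplicates could produce a zero kernel size.

```python
import math

# Sketch only: kernels, density formula, and hypothetical NeighborsNumber rules.

def gaussian_kernel(u):
    """Standard spherical Gaussian density (unit standard deviation per dimension)."""
    n = len(u)
    r2 = sum(c * c for c in u)
    return math.exp(-0.5 * r2) / (2 * math.pi) ** (n / 2)

def ball_kernel(u):
    """Uniform density on the unit ball: 1 / volume inside, 0 outside."""
    n = len(u)
    volume = math.pi ** (n / 2) / math.gamma(n / 2 + 1)
    return 1.0 / volume if math.sqrt(sum(c * c for c in u)) <= 1.0 else 0.0

def kde_pdf(x, data, sizes, kernel):
    """f(x) = (1/m) * sum_i h_i^(-n) * K((x - x_i) / h_i), with sizes[i] = h_i."""
    n = len(x)
    total = 0.0
    for xi, h in zip(data, sizes):
        u = [(a - b) / h for a, b in zip(x, xi)]
        total += kernel(u) / h ** n
    return total / len(data)

def _radius_to_size(r, n, kernel_type):
    # Ball: the kernel size is the radius itself.
    # Gaussian (assumption): the containing ball has radius h*sqrt(n), so h = r / sqrt(n).
    return r if kernel_type == "Ball" else r / math.sqrt(n)

def fixed_size(data, k, kernel_type="Gaussian"):
    """Hypothetical rule for Method -> "Fixed": smallest radius r at which training
    examples have, on average, at least k other examples within distance r."""
    m, n = len(data), len(data[0])
    pair_dists = sorted(math.dist(a, b) for i, a in enumerate(data) for b in data[i + 1:])
    # Each unordered pair within r adds 1 to the counts of two examples, so the
    # average count is 2 * (pairs within r) / m. We need ceil(k*m/2) such pairs.
    r = pair_dists[math.ceil(k * m / 2) - 1]
    return _radius_to_size(r, n, kernel_type)

def adaptive_sizes(data, k, kernel_type="Gaussian"):
    """Hypothetical rule for Method -> "Adaptive": each example's radius is the
    distance to its k-th nearest other training example."""
    n = len(data[0])
    sizes = []
    for i, a in enumerate(data):
        dists = sorted(math.dist(a, b) for j, b in enumerate(data) if j != i)
        sizes.append(_radius_to_size(dists[k - 1], n, kernel_type))
    return sizes

# Usage: a one-dimensional dataset with a fixed Gaussian kernel size.
data = [[0.0], [1.0], [3.0], [3.5]]
h = fixed_size(data, k=1)
print(h, kde_pdf([2.0], data, [h] * len(data), gaussian_kernel))
```

Counting pairs is the reason `fixed_size` can read the radius directly from the sorted pairwise distances. Below that distance, too few pairs fall inside the radius to reach an average of k neighbors.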

Examples

Basic Examples (3)

Train a "KernelDensityEstimation" distribution on a numeric dataset:

Look at the distribution Information:

Obtain an option value directly:

Compute the probability density for a new example:

Plot the PDF along with the training data:

Generate and visualize new samples:
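One standard way to draw samples from a kernel mixture is to pick a training example uniformly at random and then add noise drawn from its kernel. The sketch below is not necessarily how the Wolfram Language generates samples from a learned distribution. It works on raw numeric vectors with explicit kernel sizes, and `sample_kde` is a hypothetical helper.

```python
import math
import random

def sample_kde(data, sizes, kernel_type, count, rng=None):
    """Draw `count` points from a kernel mixture centered on `data`.
    sizes[i] is the standard deviation ("Gaussian") or radius ("Ball") of kernel i."""
    rng = rng or random.Random()
    n = len(data[0])
    samples = []
    for _ in range(count):
        i = rng.randrange(len(data))  # every mixture component has weight 1/m
        center, h = data[i], sizes[i]
        if kernel_type == "Gaussian":
            # Spherical Gaussian: independent normal noise in each dimension.
            offset = [rng.gauss(0.0, h) for _ in range(n)]
        else:  # "Ball"
            # Uniform point in a ball: random direction, radius h * U^(1/n).
            g = [rng.gauss(0.0, 1.0) for _ in range(n)]
            norm = math.sqrt(sum(c * c for c in g))
            radius = h * rng.random() ** (1.0 / n)
            offset = [radius * c / norm for c in g]
        samples.append([a + b for a, b in zip(center, offset)])
    return samples

# Usage: five new points from a one-dimensional Gaussian kernel mixture.
new_points = sample_kde([[0.0], [1.0], [3.0]], [1.0, 1.0, 2.0], "Gaussian", 5, random.Random(42))
```

The $U^{1/n}$ radius keeps ball samples uniform in volume rather than clustered near the center.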

Train a "KernelDensityEstimation" distribution on a two-dimensional dataset:

Plot the PDF along with the training data:

Use SynthesizeMissingValues to impute missing values using the learned distribution:
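With spherical Gaussian kernels of a common size h, the conditional distribution of the missing coordinates given the known ones is again a kernel mixture. Each training example is reweighted by its Gaussian factor on the known coordinates, and the missing coordinates are normal around that example's values. The sketch below imputes by sampling from this conditional. It illustrates the idea only and is not claimed to be the algorithm used by SynthesizeMissingValues. `impute_missing` is a hypothetical helper, and missing entries are marked with `None`.

```python
import math
import random

def impute_missing(example, data, h, rng=None):
    """Fill None entries of `example` by sampling from the conditional
    Gaussian-kernel mixture with a common kernel size h."""
    rng = rng or random.Random()
    known = [j for j, v in enumerate(example) if v is not None]
    # Log-weight of each training example: Gaussian factors on the known coordinates.
    # The normalizing constants are identical for all examples and cancel out.
    logw = [-sum((example[j] - xi[j]) ** 2 for j in known) / (2 * h * h) for xi in data]
    top = max(logw)
    weights = [math.exp(lw - top) for lw in logw]  # subtract the max for stability
    i = rng.choices(range(len(data)), weights=weights)[0]
    # Missing coordinates are normal around the chosen example, standard deviation h.
    return [v if v is not None else rng.gauss(data[i][j], h) for j, v in enumerate(example)]

# Usage: the second coordinate is missing.
filled = impute_missing([10.0, None], [[0.0, 0.0], [10.0, 10.0]], 0.5, random.Random(0))
```

Because the spherical Gaussian factorizes over dimensions, conditioning only changes the mixture weights. This shortcut does not carry over directly to "Ball" kernels.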

Train a "KernelDensityEstimation" distribution on a nominal dataset:

Because of the necessary preprocessing, the PDF computation is not exact:

Use ComputeUncertainty to obtain the uncertainty on the result:

Increase MaxIterations to improve the estimation precision: