"LinearRegression" (Machine Learning Method)

- Method for Predict.

- Predict values using a linear combination of features.

Details & Suboptions

- The linear regression predicts the numerical output $y$ using a linear combination of numerical features $x=\{x_1,x_2,\ldots,x_n\}$. The conditional probability $P(y|x)$ is modeled according to $P(y|x)\propto\exp\left(-\frac{(y-f(\theta,x))^2}{2\sigma^2}\right)$, with $f(\theta,x)=x\cdot\theta$.

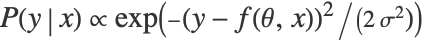

- The estimation of the parameter vector θ is done by minimizing the loss function $\frac{1}{2}\sum_{i=1}^{m}\left(y_i-f(\theta,x_i)\right)^2+\lambda_1\sum_{i=1}^{n}\left|\theta_i\right|+\frac{\lambda_2}{2}\sum_{i=1}^{n}\theta_i^2$, where m is the number of examples and n is the number of numerical features.

- The following suboptions can be given:

| Suboption | Default | Description |
|---|---|---|
| "L1Regularization" | 0 | value of $\lambda_1$ in the loss function |
| "L2Regularization" | Automatic | value of $\lambda_2$ in the loss function |
| "OptimizationMethod" | Automatic | what optimization method to use |

- Possible settings for the "OptimizationMethod" option include:

| Setting | Description |
|---|---|
| "NormalEquation" | linear algebra method |
| "StochasticGradientDescent" | stochastic gradient method |
| "OrthantWiseQuasiNewton" | orthant-wise quasi-Newton method |

- For this method, Information[PredictorFunction[…],"Function"] gives a simple expression to compute the predicted value from the features.

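As a minimal sketch of how the suboptions listed above might be passed, the following trains a predictor with an explicit L2 penalty and a chosen optimization method. The training pairs and the value 0.1 are invented for illustration and are not recommended settings:

```wolfram
(* hypothetical toy data of the form feature -> target *)
data = {1.0 -> 1.2, 2.0 -> 2.3, 3.0 -> 2.9, 4.0 -> 4.1, 5.0 -> 5.2};

(* suboptions go in a list together with the method name *)
p = Predict[data,
   Method -> {"LinearRegression",
     "L2Regularization" -> 0.1,
     "OptimizationMethod" -> "NormalEquation"}];

(* expression that computes the predicted value from the features *)
Information[p, "Function"]
```

"L1Regularization" is left at its default of 0 here, so the loss reduces to the squared error plus the L2 term.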
Examples

Basic Examples (2)

Train a predictor on labeled examples:

Look at the Information:
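The two steps above might look as follows. This is a sketch only: the training pairs are made up, and `p` is just an illustrative variable name:

```wolfram
(* train on made-up labeled examples of the form feature -> value *)
p = Predict[{1.0 -> 1.1, 2.0 -> 2.4, 3.0 -> 3.2, 4.5 -> 5.3},
   Method -> "LinearRegression"]

(* summary of the trained PredictorFunction *)
Information[p]
```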

Generate two-dimensional data:

Train a predictor function on it:

Compare the data with the predicted values and look at the standard deviation:
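A sketch of the three steps above, assuming synthetic points scattered around the line y = 2x + 1. The noise level, sample size, and plotting styles are arbitrary choices made for illustration:

```wolfram
(* hypothetical data: noisy samples around y = 2 x + 1 *)
SeedRandom[1];
data = Table[
   x -> 2 x + 1 + RandomVariate[NormalDistribution[0, 1]],
   {x, RandomReal[{-3, 3}, 40]}];

(* train the predictor on the generated pairs *)
p = Predict[data, Method -> "LinearRegression"];

(* predicted value with a band of one standard deviation, shown with the data *)
Show[
  Plot[
   {p[x],
    p[x] + StandardDeviation[p[x, "Distribution"]],
    p[x] - StandardDeviation[p[x, "Distribution"]]},
   {x, -3, 3},
   PlotStyle -> {Blue, Gray, Gray},
   Filling -> {2 -> {3}}],
  ListPlot[List @@@ data, PlotStyle -> Red]]
```

Here `p[x, "Distribution"]` returns the predicted distribution for the input `x`, and its standard deviation sets the width of the shaded band.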