"Multinormal" (Machine Learning Method)

- Method for LearnDistribution.

- Models the probability density using a multivariate normal (Gaussian) distribution.

Details & Suboptions

- "Multinormal" models the probability density of a numeric space using a multivariate normal distribution as in MultinormalDistribution.

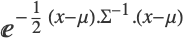

- The probability density for vector $x$ is proportional to $\exp\!\left(-\tfrac{1}{2}\,(x-\mu)^{\mathsf{T}}\,\Sigma^{-1}\,(x-\mu)\right)$, where $\mu$ and $\Sigma$ are learned parameters. If $n$ is the size of the input numeric vector, $\Sigma$ is an $n \times n$ symmetric positive definite matrix called the covariance, and $\mu$ is a size-$n$ vector.

- The following options can be given:

| Option | Default | Description |
| --- | --- | --- |
| "CovarianceType" | "Full" | type of constraint on the covariance matrix |
| "IntrinsicDimension" | Automatic | effective dimensionality of the data to assume |

- Possible settings for "CovarianceType" include:

| Setting | Meaning |
| --- | --- |
| "Diagonal" | only diagonal elements are learned (the others are set to 0) |
| "Full" | all n×n elements are learned |
| "Spherical" | only diagonal elements are learned and are set to be equal |

- Possible settings for "IntrinsicDimension" include:

| Setting | Meaning |
| --- | --- |
| Automatic | try several possible dimensions |
| "Heuristic" | use a heuristic based on the data |
| k | use the specified dimension |

- When "CovarianceType" -> "Full" and "IntrinsicDimension" -> k, with k<n, a linear dimensionality reduction is performed on the data. A full k×k covariance matrix is used to model data in the reduced space (which can be interpreted as the "signal" part), while a spherical covariance matrix is used to model the n-k remaining dimensions (which can be interpreted as the "noise" part).

- The value of "IntrinsicDimension" is ignored when "CovarianceType" -> "Diagonal" or "CovarianceType" -> "Spherical".

- Information[LearnedDistribution[…],"MethodOption"] can be used to extract the values of options chosen by the automation system.

- LearnDistribution[…,FeatureExtractor -> "Minimal"] can be used to remove most preprocessing and directly access the method.
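
The options described above can be sketched as follows. This is a minimal illustration, not an input from the original page: the three-dimensional dataset and the names `data`, `ld` and `ldRaw` are assumptions, and the suboptions are passed using the usual `Method -> {"name", suboption -> value}` form.

```wolfram
(* Hypothetical 3-dimensional numeric data; not taken from the original page *)
data = RandomVariate[
   MultinormalDistribution[{0, 0, 0},
    {{2, 1, 0}, {1, 2, 0}, {0, 0, 0.1}}], 500];

(* Suboptions are given in a list together with the method name *)
ld = LearnDistribution[data,
   Method -> {"Multinormal",
     "CovarianceType" -> "Full",
     "IntrinsicDimension" -> 2}];

(* Option values actually used by the trained distribution *)
Information[ld, "MethodOption"]

(* Remove most preprocessing so the method works on the raw vectors *)
ldRaw = LearnDistribution[data,
   Method -> "Multinormal", FeatureExtractor -> "Minimal"];
```

With "IntrinsicDimension" -> 2 on three-dimensional data, the description above implies a full 2×2 covariance for the reduced space and a spherical covariance for the one remaining dimension.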

Examples

Basic Examples (3)

Train a "Multinormal" distribution on a numeric dataset:

Look at the distribution Information:

Obtain an option value directly:

Compute the probability density for a new example:

Plot the PDF along with the training data:

Generate and visualize new samples:
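
The input cells for this example are not preserved in this text. The steps above could look roughly like the following sketch, which assumes a hypothetical one-dimensional dataset; the data, the test value 2.3 and the plot range are illustrative choices, not the original inputs.

```wolfram
(* Hypothetical one-dimensional numeric training data *)
data = RandomVariate[NormalDistribution[2, 1.5], 200];

(* Train a "Multinormal" distribution *)
ld = LearnDistribution[data, Method -> "Multinormal"];

(* Look at the distribution Information *)
Information[ld]

(* Obtain the option values directly *)
Information[ld, "MethodOption"]

(* Probability density for a new example *)
PDF[ld, 2.3]

(* PDF plotted over a density-normalized histogram of the training data *)
Show[
 Histogram[data, Automatic, "PDF"],
 Plot[PDF[ld, x], {x, -4, 8}]]

(* Generate and visualize new samples *)
Histogram[RandomVariate[ld, 1000]]
```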

Train a "Multinormal" distribution on a two-dimensional dataset:

Plot the PDF along with the training data:

Use SynthesizeMissingValues to impute missing values using the learned distribution:
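
The inputs for this example are also missing from this text. A minimal sketch of the same steps, assuming a hypothetical two-dimensional dataset named `data2` and two made-up incomplete examples:

```wolfram
(* Hypothetical two-dimensional training data with correlated components *)
data2 = RandomVariate[
   MultinormalDistribution[{0, 0}, {{1, 0.6}, {0.6, 2}}], 300];

ld2 = LearnDistribution[data2, Method -> "Multinormal"];

(* Contours of the learned PDF with the training points on top *)
Show[
 ContourPlot[PDF[ld2, {x, y}], {x, -4, 4}, {y, -5, 5}],
 ListPlot[data2, PlotStyle -> Red]]

(* Impute the Missing[] entries using the learned distribution *)
SynthesizeMissingValues[ld2, {{Missing[], 1.0}, {0.5, Missing[]}}]
```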

Train a "Multinormal" distribution on a nominal dataset:

Because of the necessary preprocessing, the PDF computation is not exact:

Use ComputeUncertainty to obtain the uncertainty on the result:

Increase MaxIterations to improve the estimation precision:
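
As with the previous examples, the original inputs are not preserved. The sketch below assumes a small made-up list of class labels, and assumes that ComputeUncertainty and MaxIterations are given as options to PDF, as the captions above suggest; the value 1000 is an arbitrary illustration.

```wolfram
(* Hypothetical nominal training data *)
labels = {"A", "A", "B", "A", "B", "A", "A", "C"};

ldNominal = LearnDistribution[labels, Method -> "Multinormal"];

(* The result is an estimate, because of the preprocessing involved *)
PDF[ldNominal, "A"]

(* Ask for the uncertainty on the estimate *)
PDF[ldNominal, "A", ComputeUncertainty -> True]

(* More iterations should tighten the estimate *)
PDF[ldNominal, "A", ComputeUncertainty -> True, MaxIterations -> 1000]
```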

Options (2)

"CovarianceType" (1)
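
The example for this option is not preserved in this text. One way to compare the three settings is sketched below, assuming a hypothetical correlated two-dimensional dataset; the names `dataCov` and `lds` and the plot ranges are illustrative.

```wolfram
(* Hypothetical correlated two-dimensional data *)
dataCov = RandomVariate[
   MultinormalDistribution[{0, 0}, {{1, 0.8}, {0.8, 1}}], 300];

(* Train one distribution per covariance type *)
lds = AssociationMap[
   LearnDistribution[dataCov,
     Method -> {"Multinormal", "CovarianceType" -> #}] &,
   {"Full", "Diagonal", "Spherical"}];

(* One contour plot of the learned PDF per setting *)
KeyValueMap[
 ContourPlot[PDF[#2, {x, y}], {x, -3, 3}, {y, -3, 3},
   PlotLabel -> #1] &,
 lds]
```

Since "Diagonal" and "Spherical" set the off-diagonal elements to 0, only the "Full" setting can represent the correlation between the two components.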

See Also

LearnDistribution LearnedDistribution MultinormalDistribution NormalDistribution DimensionReduction AnomalyDetection Covariance SynthesizeMissingValues RandomVariate PDF RarerProbability

Methods: GaussianMixture ContingencyTable KernelDensityEstimation DecisionTree LinearRegression LogisticRegression