PredictorMeasurements[predictor,testset,prop]

gives measurements associated with the property prop when predictor is evaluated on testset.

PredictorMeasurements[predictor,testset]

yields a measurement report that can be applied to any property.

PredictorMeasurements[data,…]

uses predictions data instead of a predictor.

PredictorMeasurements[…,{prop1,prop2,…}]

gives properties prop1, prop2, etc.

Details and Options

- Measurements are used to determine the performance of a predictor on data that was not used for training purposes (the test set).

- Possible measurements include prediction metrics (standard deviation, likelihood, etc.), visualizations (comparison plot, residual histogram, etc.) or specific examples (such as the worst predicted examples).

- The predictor is typically a PredictorFunction object as generated by Predict.

- In PredictorMeasurements[data,…], the predictions data can have the following forms:

  {y1,y2,…}         predictions from a predictor (human, algorithm, etc.)
  {dist1,dist2,…}   predictive distributions obtained by a predictor

- PredictorMeasurements[…,opts] specifies that the predictor should use the options opts when applied to the test set. Possible options are as given in PredictorFunction.

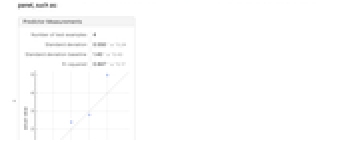

- PredictorMeasurements[predictor,testset] returns a PredictorMeasurementsObject[…] that displays as a report panel, such as:

-

- PredictorMeasurementsObject[…][prop] can be used to look up prop from a PredictorMeasurementsObject. When repeated property lookups are required, this is typically more efficient than using PredictorMeasurements every time.

- PredictorMeasurementsObject[…][prop,opts] specifies that the predictor should use the options opts when applied to the test set. These options supersede original options given to PredictorMeasurements.

- PredictorMeasurements has the same options as PredictorFunction[…], with the following additions:

  Weights              Automatic   weights to be associated with test set examples
  ComputeUncertainty   False       whether measures should be given with their statistical uncertainty

- When ComputeUncertainty->True, numerical measures will be returned as Around[result,err], where err represents the standard error (corresponding to a 68% confidence interval) associated with measure result.

- Possible settings for Weights include:

  Automatic    associates weight 1 with all test examples
  {w1,w2,…}    associates weight wi with the ith test example

- Changing the weight of a test example from 1 to 2 is equivalent to duplicating the example.

- Weights affect measures as well as their uncertainties.

- Properties returning a single numeric value related to prediction abilities on the test set include:

  "StandardDeviation"                 root mean square of the residuals
  "StandardDeviationBaseline"         standard deviation of test set values
  "LogLikelihood"                     log-likelihood of the model given the test data
  "MeanCrossEntropy"                  mean cross entropy over test examples
  "MeanDeviation"                     mean absolute value of the residuals
  "MeanSquare"                        mean square of the residuals
  "RSquared"                          coefficient of determination
  "FractionVarianceUnexplained"       fraction of variance unexplained
  "Perplexity"                        exponential of the mean cross entropy
  "RejectionRate"                     fraction of examples predicted as Indeterminate
  "GeometricMeanProbabilityDensity"   geometric mean of the actual-value probability densities

- Test examples predicted as Indeterminate will be discarded when measuring properties related to prediction abilities on the test set, such as "StandardDeviation" or "MeanCrossEntropy".

- Properties returning graphics include:

  "ComparisonPlot"                plot of predicted values versus test values
  "ICEPlots"                      Individual Conditional Expectation (ICE) plots
  "ProbabilityDensityHistogram"   histogram of actual-value probability densities
  "Report"                        panel reporting main measurements
  "ResidualHistogram"             histogram of residuals
  "ResidualPlot"                  plot of the residuals
  "SHAPPlots"                     Shapley additive feature explanations plot for each class

- Timing-related properties include:

  "EvaluationTime"        time needed to predict one example of the test set
  "BatchEvaluationTime"   marginal time to predict one example in a batch

- Properties returning one value for each test-set example include:

  "Residuals"              list of differences between predicted and test values
  "ProbabilityDensities"   actual-value prediction probability densities
  "SHAPValues"             Shapley additive feature explanations for each example

- "SHAPValues" assesses the contribution of features by comparing predictions with different sets of features removed and then synthesized. The option MissingValueSynthesis can be used to specify how the missing features are synthesized. SHAP explanations are given as deviations from the training output mean. "SHAPValues"->n can be used to control the number of samples used for the numeric estimation of SHAP explanations.

- Properties returning examples from the test set include:

  "BestPredictedExamples"    examples having the highest actual-value probability density
  "Examples"                 all test examples
  "Examples"->{i1,i2}        examples whose actual value is in the interval i1 and whose predicted value is in the interval i2
  "LeastCertainExamples"     examples having the highest distribution entropy
  "MostCertainExamples"      examples having the lowest distribution entropy
  "WorstPredictedExamples"   examples having the lowest actual-value probability density

- Examples are given in the form inputi->valuei, where valuei is the actual value.

- Properties such as "WorstPredictedExamples" or "MostCertainExamples" output up to 10 examples. PredictorMeasurementsObject[…][prop->n] can be used to output n examples.

- Other properties include:

  "PredictorFunction"   PredictorFunction[…] being measured
  "Properties"          list of measurement properties available

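The SHAP description above can be illustrated with exact Shapley values for a small model. This Python sketch is not Wolfram's implementation: instead of synthesizing the removed features (the role of MissingValueSynthesis), it replaces each removed feature with its training mean, and it enumerates every feature subset instead of sampling. For a linear model, this baseline makes the explanations deviations from the training output mean. The model, the data and the function names are hypothetical.

```python
import math
from itertools import combinations

def shapley_values(model, x, baseline):
    """Exact Shapley values of model at point x.

    A feature outside the coalition is replaced by its baseline value
    (here the training mean), a simplification of feature synthesis.
    """
    n = len(x)

    def value(coalition):
        point = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return model(point)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for k in range(n):  # coalition sizes 0 .. n-1
            weight = math.factorial(k) * math.factorial(n - k - 1) / math.factorial(n)
            for subset in combinations(others, k):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis

def model(v):
    # Hypothetical linear model standing in for a trained predictor
    return 2.0 * v[0] - 1.0 * v[1] + 0.5 * v[2] + 3.0

train = [[1.0, 2.0, 0.0], [3.0, 0.0, 4.0], [2.0, 4.0, 2.0]]
means = [sum(col) / len(train) for col in zip(*train)]
x = [4.0, 1.0, 6.0]
phis = shapley_values(model, x, means)
print(phis)  # per-feature contributions
```

For this linear model each contribution is the weight times the deviation of the feature from its training mean (4, 1 and 2), and the contributions add up to the prediction minus the mean training output (13 - 6 = 7).
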
Examples

Basic Examples (3)

Train a predictor on a training set:

Measure the standard deviation of the predictor on the test set:

Visualize a scatter plot of the actual and predicted values:

Measure several properties at once:

Train a predictor on a training set:

Generate a measurement object of the predictor on the test set:

Obtain the list of measurement properties available:

Measure the standard deviation of the predictor on the test set:

Obtain the standard deviation along with its statistical uncertainty due to finite test-set size:

Measure the standard deviation directly from predicted examples:

Scope (3)

Residual-Based Metrics (1)

Visualize the residuals of predicted examples against their true values:

Measure the standard deviation:

This is equivalent to computing the root mean square of the residuals:

Obtain the statistical uncertainty on this measure:

Compare the standard deviation to a baseline (always predicting the mean of the test-set values):

This is equivalent to the proportion of variance that is explained:
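The equivalences in this subsection follow from the definitions in the property table. The Python sketch below (not Wolfram Language; the function name is hypothetical) computes the residual-based measures from lists of actual and predicted values. It assumes that both the residual mean square and the baseline variance divide by the (weighted) number of examples, so that "RSquared" equals 1 minus the squared ratio of "StandardDeviation" to "StandardDeviationBaseline"; the exact normalization Wolfram uses is not stated on this page. It also assumes that an Indeterminate prediction can be modeled as None and that weights act as multiplicities, so a weight of 2 behaves like a duplicated example.

```python
import math

def residual_metrics(actual, predicted, weights=None):
    """Sketch of residual-based measures.

    A prediction of None stands in for Indeterminate: such examples are
    discarded and only counted in the rejection rate. weights=None plays
    the role of Weights -> Automatic (weight 1 for every example).
    """
    if weights is None:
        weights = [1.0] * len(actual)
    kept = [(y, p, w) for y, p, w in zip(actual, predicted, weights) if p is not None]
    total = sum(w for _, _, w in kept)

    def wmean(values):
        # Weighted mean of per-example values, aligned with `kept`
        return sum(w * v for (_, _, w), v in zip(kept, values)) / total

    residuals = [y - p for y, p, _ in kept]  # the sign does not matter below
    mean_square = wmean([r * r for r in residuals])
    mean_y = wmean([y for y, _, _ in kept])
    baseline_var = wmean([(y - mean_y) ** 2 for y, _, _ in kept])
    fvu = mean_square / baseline_var
    return {
        "MeanDeviation": wmean([abs(r) for r in residuals]),
        "MeanSquare": mean_square,
        "StandardDeviation": math.sqrt(mean_square),
        "StandardDeviationBaseline": math.sqrt(baseline_var),
        "FractionVarianceUnexplained": fvu,
        "RSquared": 1 - fvu,
        "RejectionRate": 1 - total / sum(weights),
    }

actual = [3.0, 5.0, 7.0, 9.0, 4.0]
predicted = [2.5, 5.5, 6.0, 9.5, None]  # None: the last example is Indeterminate
m = residual_metrics(actual, predicted)
print(m)

# Weight 2 on the third example gives the same measures as duplicating it
weighted = residual_metrics(actual, predicted, [1, 1, 2, 1, 1])
duplicated = residual_metrics(actual + [7.0], predicted + [6.0])
```
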

Comparison Plot and Example Extraction (1)

Create a training set and a test set from the Boston homes data:

Train a model on the training set:

Create a predictor measurements object for this predictor on the test set:

Find the two test examples that have the worst predictions:

Find the two test examples that have the best predictions:

Compare the predicted and correct values:

Extract the examples whose true values are between 30 and 40 and whose predictions are between 40 and 50:
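The interval-based extraction and the ranking by probability density can be sketched in a few lines of Python. The function names, the (input, actual, predicted) triples and the density values are hypothetical, and the intervals are assumed to be closed; the returned pairs mirror the inputi->valuei form, where valuei is the actual value. The default n=10 mirrors the "up to 10 examples" behavior described in the Details.

```python
def examples_in_region(examples, actual_range, predicted_range):
    """Hypothetical analogue of "Examples" -> {i1, i2}.

    examples: list of (input, actual, predicted) triples.
    Returns (input, actual) pairs whose actual value lies in actual_range
    and whose prediction lies in predicted_range (closed intervals assumed).
    """
    (a_lo, a_hi), (p_lo, p_hi) = actual_range, predicted_range
    return [(inp, y) for inp, y, pred in examples
            if a_lo <= y <= a_hi and p_lo <= pred <= p_hi]

def worst_predicted(examples, densities, n=10):
    """Hypothetical analogue of "WorstPredictedExamples" -> n: the n examples
    whose actual value received the lowest predictive probability density."""
    order = sorted(range(len(examples)), key=lambda k: densities[k])
    return [(examples[k][0], examples[k][1]) for k in order[:n]]

# Hypothetical test data: (input, actual value, predicted value)
examples = [("a", 35.0, 42.0), ("b", 20.0, 21.0), ("c", 38.0, 47.5), ("d", 31.0, 30.0)]
densities = [0.02, 0.30, 0.01, 0.20]  # density assigned to each actual value

print(examples_in_region(examples, (30, 40), (40, 50)))  # → [('a', 35.0), ('c', 38.0)]
print(worst_predicted(examples, densities, n=2))         # → [('c', 38.0), ('a', 35.0)]
```
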

Probabilistic Metrics (1)

Create and visualize an artificial dataset from the expression Cos[x*y]:

Split the dataset into a training set and a test set:

Train a predictor on the training set:

Measure the log-likelihood of the test set (sum of the log-PDFs of the actual values):

Measure the mean cross-entropy:

The mean cross-entropy is the average negative log-likelihood:
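The relations among the probabilistic measures can be checked numerically. In this Python sketch (function name and data hypothetical, not Wolfram's code), each test example has a normal predictive distribution built with statistics.NormalDist. Following the property table, "LogLikelihood" is taken as the sum of the log densities of the actual values, "MeanCrossEntropy" as its negated average, "Perplexity" as the exponential of the mean cross entropy, and the geometric mean density as the reciprocal of the perplexity.

```python
import math
from statistics import NormalDist

def probabilistic_metrics(actual, distributions):
    """Density-based measures from per-example predictive distributions."""
    densities = [d.pdf(y) for y, d in zip(actual, distributions)]
    log_likelihood = sum(math.log(p) for p in densities)
    mean_cross_entropy = -log_likelihood / len(actual)
    return {
        "LogLikelihood": log_likelihood,
        "MeanCrossEntropy": mean_cross_entropy,
        "Perplexity": math.exp(mean_cross_entropy),
        "GeometricMeanProbabilityDensity": math.exp(-mean_cross_entropy),
    }

actual = [1.0, 2.5, -0.3]
dists = [NormalDist(0.8, 0.5), NormalDist(2.0, 1.0), NormalDist(0.0, 0.3)]
metrics = probabilistic_metrics(actual, dists)
print(metrics)
```
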

Options (5)

IndeterminateThreshold (1)

Create an artificial dataset and visualize it:

Split the dataset into a training set and a test set:

Train a predictor on the training set:

Plot the predicted distribution for a few feature values:

Compute the root mean square of the residuals:

Perform the same computation with a different threshold value for the predictor:

This operation can also be done on the PredictorMeasurementsObject:

Plot the standard deviation and the rejection rate as a function of the threshold:

TargetDevice (1)

Train a predictor using a neural network:

Measure the standard deviation of the predictor on a test set for different settings of TargetDevice:

UtilityFunction (1)

Define a training and a test set:

Train a predictor on the training set:

Define and visualize a utility function that penalizes predicted values smaller than the actual value:

Compute the residuals of the predictor on the test set with this utility function:

The residuals with the default utility function are higher:

The utility function can also be specified when using the PredictorMeasurementsObject:
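One way to see why an asymmetric utility function shifts the predictions: assuming, for illustration, that the predictor returns the value that maximizes expected utility under its predictive distribution, a heavier penalty on underprediction moves the returned value above the center of the distribution. This Python sketch uses a hypothetical piecewise-linear utility that costs 3 per unit of underprediction and 1 per unit of overprediction; for such a utility the optimum is the 3/(3+1) = 0.75 quantile. The expectation is approximated with evenly spaced quantiles of a NormalDist, and the candidate grid is arbitrary.

```python
from statistics import NormalDist

def utility(predicted, actual, under_penalty=3.0, over_penalty=1.0):
    """Hypothetical utility: each unit of underprediction costs 3, each unit
    of overprediction costs 1 (utilities are negated costs)."""
    if predicted < actual:
        return -under_penalty * (actual - predicted)
    return -over_penalty * (predicted - actual)

def best_prediction(dist, candidates, n_samples=2000):
    """Return the candidate with the highest expected utility, where the
    expectation is approximated with evenly spaced quantiles of dist."""
    samples = [dist.inv_cdf((k + 0.5) / n_samples) for k in range(n_samples)]

    def expected_utility(c):
        return sum(utility(c, y) for y in samples) / n_samples

    return max(candidates, key=expected_utility)

dist = NormalDist(mu=10.0, sigma=2.0)              # predictive distribution
candidates = [8.0 + 0.02 * k for k in range(201)]  # grid from 8.0 to 12.0
choice = best_prediction(dist, candidates)
# The chosen value lies near the 0.75 quantile, above the mean 10.0
print(choice, dist.inv_cdf(0.75))
```
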

"Uncertainty" (1)

Train a predictor on the "WineQuality" dataset:

Generate a PredictorMeasurementsObject[…] using a test set:

Obtain a measure of the standard deviation along with its uncertainty:

Obtain a measure of other properties along with their uncertainties:
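The Details state that err in Around[result,err] is a standard error, but this page does not say how it is estimated. The Python sketch below uses a bootstrap over test examples, a swapped-in technique chosen only for illustration and not necessarily Wolfram's method, to attach a standard error to the root mean square of the residuals. The helper names and the residual values are hypothetical.

```python
import math
import random

def rms(residuals):
    """Root mean square of the residuals, i.e. the "StandardDeviation" measure."""
    return math.sqrt(sum(r * r for r in residuals) / len(residuals))

def bootstrap_standard_error(residuals, measure, n_resamples=2000, seed=0):
    """Hypothetical helper: resample the test examples with replacement and
    return the measure together with the spread of its resampled values."""
    rng = random.Random(seed)  # fixed seed for reproducibility
    n = len(residuals)
    estimates = []
    for _ in range(n_resamples):
        sample = [residuals[rng.randrange(n)] for _ in range(n)]
        estimates.append(measure(sample))
    mean_est = sum(estimates) / n_resamples
    variance = sum((e - mean_est) ** 2 for e in estimates) / (n_resamples - 1)
    return measure(residuals), math.sqrt(variance)

residuals = [0.3, -1.2, 0.8, -0.1, 0.5, -0.7, 1.1, -0.4]
result, err = bootstrap_standard_error(residuals, rms)
# Reported in the spirit of Around[result, err]
print(f"{result:.3f} ± {err:.3f}")
```
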

Applications (2)

Load a dataset of the average monthly temperature as a function of the city, the year and the month:

Split the dataset into a training set and a test set:

Train a predictor on the training set:

Generate a PredictorMeasurementsObject from the predictor and the test set:

Compute the mean cross entropy of the predictor on the test set:

Visualize the scatter plot of the test values as a function of the predicted values:

Extract the test examples that are in a given region of the comparison plot:

Extract the 20 worst predicted examples:

Train a predictor that predicts the median value of properties in a neighborhood of Boston, given some features of the neighborhood:

Generate a predictor measurements object to analyze the performance of the predictor on a test set:

Plot a histogram of the residuals:

Compute the standard deviation of the predicted values from the actual values (root mean square of the residuals):

See Also

PredictorMeasurementsObject Predict Information PredictorFunction ClassifierMeasurements NetMeasurements LinearModelFit Histogram

Function Repository: CrossValidateModel

History

Introduced in 2014 (10.0) | Updated in 2017 (11.1) ▪ 2018 (11.3) ▪ 2019 (12.0) ▪ 2020 (12.1) ▪ 2020 (12.2) ▪ 2021 (13.0)

Text

Wolfram Research (2014), PredictorMeasurements, Wolfram Language function, https://reference.wolfram.com/language/ref/PredictorMeasurements.html (updated 2021).
