Eigenvectors

Eigenvectors[m]

gives a list of the eigenvectors of the square matrix m.

Eigenvectors[{m,a}]

gives the generalized eigenvectors of m with respect to a.

Eigenvectors[m,k]

gives the first k eigenvectors of m.

Eigenvectors[{m,a},k]

gives the first k generalized eigenvectors.

Details and Options

- Eigenvectors finds numerical eigenvectors if m contains approximate real or complex numbers.

- For approximate numerical matrices m, the eigenvectors are normalized.

- For exact or symbolic matrices m, the eigenvectors are not normalized.

- Eigenvectors corresponding to degenerate eigenvalues are chosen to be linearly independent.

- For an n×n matrix, Eigenvectors always returns a list of length n. The list contains each of the independent eigenvectors of the matrix, supplemented if necessary with an appropriate number of vectors of zeros.

- Eigenvectors with numeric eigenvalues are sorted in order of decreasing absolute value of their eigenvalues.

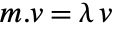

- The eigenvectors of a matrix m are nonzero vectors $v$ for which $m \cdot v = \lambda\, v$ for some scalar $\lambda$.

- The generalized eigenvectors of m with respect to a are those $v$ for which $m \cdot v = \lambda\, a \cdot v$ or for which $a \cdot v = 0$.

- When matrices m and a have a dimension-$d$ shared null space, then $d$ of their generalized eigenvectors will be returned as zero vectors.

- Eigenvectors[m,spec] is equivalent to Take[Eigenvectors[m],spec].

- Eigenvectors[m,UpTo[k]] gives k eigenvectors, or as many as are available.

- SparseArray objects and structured arrays can be used in Eigenvectors.

- Eigenvectors has the following options and settings:

| Cubics | False | whether to use radicals to solve cubics |
| Method | Automatic | method to use |
| Quartics | False | whether to use radicals to solve quartics |
| ZeroTest | Automatic | test to determine when expressions are zero |

- The ZeroTest option only applies to exact and symbolic matrices.

- Explicit Method settings for approximate numeric matrices include:

| "Arnoldi" | Arnoldi iterative method for finding a few eigenvalues |
| "Banded" | direct banded matrix solver for Hermitian matrices |
| "Direct" | direct method for finding all eigenvalues |
| "FEAST" | FEAST iterative method for finding eigenvalues in an interval (applies to Hermitian matrices only) |

- The "Arnoldi" method is also known as a Lanczos method when applied to symmetric or Hermitian matrices.

- The "Arnoldi" and "FEAST" methods take suboptions Method ->{"name",opt1->val1,…}, which can be found in the Method subsection.

Examples

Basic Examples (5)

Eigenvectors of an exact, two-dimensional matrix:

Assign the eigenvectors to individual variables:

The result of multiplying each eigenvector by m is a scalar multiple of the eigenvector:

These factors are the eigenvalues of m:
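The steps above can be sketched as follows. The matrix here is a hypothetical stand-in chosen for illustration, not the one from the original example, and Eigensystem is used so that each eigenvector is paired with its own eigenvalue:

```wolfram
(* Hypothetical exact 2x2 matrix, chosen only for illustration *)
m = {{2, 1}, {1, 2}};

(* Eigenvectors returns a list of vectors; assign them to individual variables *)
{v1, v2} = Eigenvectors[m];

(* Eigensystem returns matching lists {eigenvalues, eigenvectors} *)
{vals, vecs} = Eigensystem[m];

(* Multiplying each eigenvector by m gives the eigenvalue times that eigenvector *)
Table[m . vecs[[i]] == vals[[i]] vecs[[i]], {i, Length[vals]}]
```

Each comparison in the final list should evaluate to True, whatever scaling Eigenvectors chooses for the vectors.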

Eigenvectors of a three-dimensional matrix:

Scope (20)

Basic Uses (6)

Subsets of Eigenvalues (5)

Compute the eigenvectors corresponding to the three largest eigenvalues:

Eigenvectors corresponding to the three smallest eigenvalues:

Find the eigenvectors corresponding to the 4 largest eigenvalues, or as many as there are if fewer:
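A minimal sketch of the three forms of subset specification, using a hypothetical diagonal matrix whose eigenvalues are simply 1 through 5:

```wolfram
(* Hypothetical exact 5x5 matrix with distinct eigenvalues 1, 2, 3, 4, 5 *)
m = DiagonalMatrix[{1, 2, 3, 4, 5}];

(* Eigenvectors for the three largest eigenvalues *)
largest3 = Eigenvectors[m, 3];

(* Eigenvectors for the three smallest eigenvalues *)
smallest3 = Eigenvectors[m, -3];

(* Ask for up to 7 eigenvectors; only 5 are available for a 5x5 matrix *)
upTo7 = Eigenvectors[m, UpTo[7]];

(* Compare how many vectors each call returned *)
Length /@ {largest3, smallest3, upTo7}
```

The UpTo form is the one to reach for when the matrix size is not known in advance, since it returns as many vectors as exist rather than requiring exactly k.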

Repeats are considered when extracting a subset of the eigenvalues:

The first two vectors both correspond to the eigenvalue 4:

The third corresponds to the eigenvalue 3:

Zero vectors are used when there are more eigenvalues than independent eigenvectors:
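As an illustration of the zero-vector padding, here is a small sketch with a standard defective matrix (chosen for this sketch, not taken from the original example). The eigenvalue 1 has multiplicity two, but only one independent eigenvector exists:

```wolfram
(* A defective matrix: eigenvalue 1 is repeated, but its eigenspace is one-dimensional *)
m = {{1, 1}, {0, 1}};

(* The result still has length 2 *)
vecs = Eigenvectors[m]

(* Count how many entries are the zero vector *)
Count[vecs, {0, 0}]
```

The count should be 1: the list has one genuine eigenvector and one zero vector standing in for the missing one.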

Generalized Eigenvalues (4)

Special Matrices (5)

Eigenvectors of sparse matrices:

Eigenvectors of structured matrices:

The units of a QuantityArray object are in the eigenvalues, leaving the eigenvectors dimensionless:

The eigenvectors of IdentityMatrix[n] form the standard basis for a vector space:

Eigenvectors of HilbertMatrix:

If the matrix is first numericized, the eigenvectors (but not eigenvalues) change significantly:

This is because the eigenvectors are normalized for numeric input:
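The effect of normalization can be checked directly. This sketch assumes a 3×3 Hilbert matrix, which may differ in size from the one in the original example, and compares vector lengths for exact and approximate input:

```wolfram
(* Exact 3x3 Hilbert matrix; the size is an assumption made for this sketch *)
h = HilbertMatrix[3];

(* Exact input: eigenvectors are not normalized *)
exactVecs = Eigenvectors[h];

(* Approximate input: eigenvectors are normalized *)
numVecs = Eigenvectors[N[h]];

(* Lengths of the exact eigenvectors, evaluated numerically *)
N[Norm /@ exactVecs]

(* Lengths of the numeric eigenvectors, each expected to be close to 1 *)
Norm /@ numVecs
```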

Eigenvectors of a CenteredInterval matrix:

Find eigenvectors for a random representative mrep of m:

Verify that, after reordering and scaling, vecs contains rvecs:

Options (10)

Cubics (1)

Method (8)

"Arnoldi" (5)

The Arnoldi method can be used for machine- and arbitrary-precision matrices. The implementation of the Arnoldi method is based on the "ARPACK" library. It is most useful for large sparse matrices.

The following suboptions can be specified for the method "Arnoldi":

| "BasisSize" | the size of the Arnoldi basis |
| "Criteria" | which criteria to use |
| "MaxIterations" | the maximum number of iterations |
| "Shift" | the Arnoldi shift |
| "StartingVector" | the initial vector to start iterations |
| "Tolerance" | the tolerance used to terminate iterations |

Possible settings for "Criteria" include:

| "Magnitude" | based on Abs |
| "RealPart" | based on Re |
| "ImaginaryPart" | based on Im |
| "BothEnds" | a few eigenvalues from both ends of the symmetric real matrix spectrum |

Compute the largest eigenvectors using different "Criteria" settings. The matrix m has eigenvalues ![]() :

By default, "Criteria"->"Magnitude" selects an eigenvector corresponding to a largest-magnitude eigenvalue:

Find an eigenvector corresponding to a largest real-part eigenvalue:

Find an eigenvector corresponding to a largest imaginary-part eigenvalue:

Find two eigenvectors from both ends of the matrix spectrum:
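A sketch of how the "Criteria" suboption is passed. The matrix below is hypothetical (a diagonal matrix, so its eigenvalues can be read off directly) and is not the matrix from the original example:

```wolfram
(* Hypothetical machine-precision matrix; its eigenvalues are the diagonal entries *)
m = N[DiagonalMatrix[{-5, 3, 1 + 4 I, 2, 1, -1}]];

(* Default criterion "Magnitude": the largest |lambda| here belongs to -5 *)
Eigenvectors[m, 1, Method -> "Arnoldi"]

(* "RealPart": the largest Re[lambda] here belongs to 3 *)
Eigenvectors[m, 1, Method -> {"Arnoldi", "Criteria" -> "RealPart"}]

(* "ImaginaryPart": the largest Im[lambda] here belongs to 1 + 4 I *)
Eigenvectors[m, 1, Method -> {"Arnoldi", "Criteria" -> "ImaginaryPart"}]
```

The "BothEnds" setting is left out of this sketch because it applies to real symmetric matrices, which this complex example is not.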

Use "StartingVector" to avoid randomness:

Different starting vectors may converge to different eigenvectors:

Use "Shift"->μ to shift the eigenvalues by transforming the matrix $A$ to $A - \mu I$. This preserves the eigenvectors but changes the eigenvalues by -μ. The method compensates for the changed eigenvalues. "Shift" is typically used to find eigenpairs when no criterion such as largest or smallest magnitude can select them:
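The following sketch shows the suboption syntax only. The matrix and the shift value 4.2 are assumptions made for illustration, and the check at the end does not assume which eigenpair the method selects:

```wolfram
(* Hypothetical machine-precision matrix with eigenvalues 1, 2, ..., 10 *)
m = N[DiagonalMatrix[Range[10]]];

(* Request one eigenvector, using an assumed shift of 4.2 *)
vecs = Eigenvectors[m, 1, Method -> {"Arnoldi", "Shift" -> 4.2}];
v = First[vecs];

(* Recover the eigenvalue that belongs to v from the Rayleigh quotient *)
lambda = (Conjugate[v] . m . v)/(Conjugate[v] . v);

(* The residual m.v - lambda v should vanish up to rounding if the iteration converged *)
Chop[m . v - lambda v]
```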

"Banded" (1)

"FEAST" (2)

The FEAST method can be used for real symmetric or complex Hermitian machine-precision matrices. The method is most useful for finding eigenvectors in a given interval.

The following suboptions can be specified for the method "FEAST":

| "ContourPoints" | select the number of contour points |
| "Interval" | interval for finding eigenvalues |
| "MaxIterations" | the maximum number of refinement loops |
| "NumberOfRestarts" | the maximum number of restarts |
| "SubspaceSize" | the initial size of subspace |
| "Tolerance" | the tolerance to terminate refinement |
| "UseBandedSolver" | whether to use a banded solver |

Compute eigenvectors corresponding to eigenvalues from the interval ![]() :
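A sketch of the "Interval" suboption, assuming a hypothetical symmetric matrix and an interval chosen for illustration (the interval from the original example is not reproduced here):

```wolfram
(* Hypothetical real symmetric machine-precision matrix with eigenvalues 1, 2, ..., 10 *)
m = N[DiagonalMatrix[Range[10]]];

(* Eigenvectors whose eigenvalues lie in the assumed interval from 2.5 to 5.5 *)
vecs = Eigenvectors[m, Method -> {"FEAST", "Interval" -> {2.5, 5.5}}];

(* One vector is expected for each eigenvalue that falls inside the interval *)
Length[vecs]
```

No count k is given in this call, since the number of eigenvectors returned is determined by how many eigenvalues fall inside the interval.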

Applications (16)

The Geometry of Eigenvectors (3)

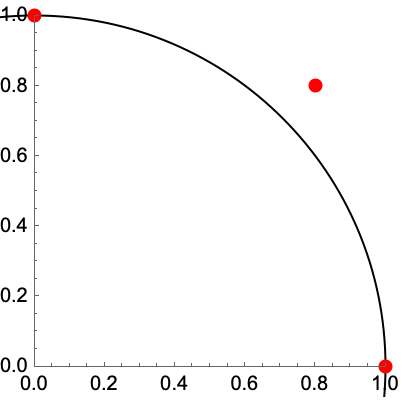

Eigenvectors with positive eigenvalues point in the same direction when acted on by the matrix:

Eigenvectors with negative eigenvalues point in the opposite direction when acted on by the matrix:

Consider the following matrix $a$ and its associated quadratic form $q = x \cdot a \cdot x$:

The eigenvectors are the axes of the hyperbolas defined by $q = \pm 1$:

The sign of the eigenvalue corresponds to the sign of the right-hand side of the hyperbola equation:

Here is a positive-definite quadratic form in three dimensions:

Get the symmetric matrix for the quadratic form, using CoefficientArrays:

Diagonalization (5)

Diagonalize the following matrix as $m = p \cdot d \cdot p^{-1}$. First, compute $m$'s eigenvalues:

Construct a diagonal matrix $d$ from the eigenvalues:

Next, compute $m$'s eigenvectors and place them in the columns of a matrix:

Any function of the matrix can now be computed as $f(m) = p \cdot f(d) \cdot p^{-1}$. For example, MatrixPower:

Similarly, MatrixExp becomes trivial, requiring only exponentiating the diagonal elements of $d$:
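The diagonalization steps can be sketched end to end as follows. The matrix is a hypothetical one with eigenvalues 5 and 2, and the names p and d are chosen here to stand for the eigenvector matrix and the diagonal matrix:

```wolfram
(* Hypothetical exact matrix with eigenvalues 5 and 2 *)
m = {{4, 1}, {2, 3}};

(* Matching eigenvalues and eigenvectors *)
{vals, vecs} = Eigensystem[m];

(* Diagonal matrix of eigenvalues *)
d = DiagonalMatrix[vals];

(* Eigenvectors are returned as rows, so transpose to place them in columns *)
p = Transpose[vecs];

(* Check the factorization m = p.d.Inverse[p] *)
p . d . Inverse[p] == m

(* A matrix power only requires powers of the diagonal entries *)
p . MatrixPower[d, 5] . Inverse[p] == MatrixPower[m, 5]
```

Both comparisons should evaluate to True, since all arithmetic here is exact.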

Let $T$ be the linear transformation whose standard matrix is given by the matrix $a$. Find a basis $b$ for ![]() with the property that the representation of $T$ in that basis, $[T]_b$, is diagonal:

Let $b$ consist of the eigenvectors of $a$, and let $p$ be the matrix whose columns are the elements of $b$:

$p$ converts from $b$-coordinates to standard coordinates. Its inverse converts in the reverse direction:

Thus $[T]_b$ is given by $p^{-1} \cdot a \cdot p$, which is diagonal:

Note that this is simply the diagonal matrix whose entries are the eigenvalues:

A real-valued symmetric matrix is orthogonally diagonalizable as $s = o \cdot d \cdot o^{\mathsf{T}}$, with $d$ diagonal and real valued and $o$ orthogonal. Verify that the following matrix is symmetric and then diagonalize it:

For an orthogonal matrix, it is necessary to normalize the eigenvectors before placing them in columns:

The eigenvalues are real, as expected:

The matrix $d$ has the eigenvalues on the diagonal:
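A compact sketch of the orthogonal diagonalization, using a hypothetical symmetric matrix with distinct eigenvalues so that the eigenvectors are automatically orthogonal and only need normalizing:

```wolfram
(* Hypothetical real symmetric matrix with distinct eigenvalues 3 and 1 *)
s = {{2, 1}, {1, 2}};
SymmetricMatrixQ[s]

(* Matching eigenvalues and eigenvectors *)
{vals, vecs} = Eigensystem[s];

(* Normalize each eigenvector, then place the results in columns *)
o = Transpose[Normalize /@ vecs];
OrthogonalMatrixQ[o]

(* Transpose[o].s.o is the diagonal matrix of eigenvalues *)
Simplify[Transpose[o] . s . o] == DiagonalMatrix[vals]
```

All three tests should return True. For a symmetric matrix with repeated eigenvalues, the eigenvectors within one eigenspace would additionally need to be orthogonalized, for example with Orthogonalize.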

A matrix is called normal if $n \cdot n^{\dagger} = n^{\dagger} \cdot n$. Normal matrices are the most general kind of matrix that can be diagonalized by a unitary transformation. All real symmetric matrices $s$ are normal because both sides of the equality are simply $s \cdot s$:

Show that the following matrix is normal, then diagonalize it:

Confirm using NormalMatrixQ:

Normalizing the eigenvectors and putting them in columns gives a unitary matrix:

The eigenvalues of a real normal matrix that is not also symmetric are complex valued:

Construct a diagonal matrix from the eigenvalues:

The eigenvectors of a nondiagonalizable matrix:

The dimension of the span of all the eigenvectors is less than the length, three, of the matrix:

Estimate the probability that a random 4×4 matrix of ones and zeros is not diagonalizable:

Differential Equations and Dynamical Systems (4)

Solve the system of ODEs ![]() , ![]() , ![]() . First, construct the coefficient matrix ![]() for the right-hand side:

Find the eigenvalues and eigenvectors:

Construct a diagonal matrix whose entries are the exponentials of $\lambda_i t$:

Construct the matrix whose columns are the corresponding eigenvectors:

The general solution is ![]() for three arbitrary starting values:

Verify the solution using DSolveValue:
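The same construction can be sketched for a smaller, hypothetical two-variable system $x'(t) = a \cdot x(t)$. The matrix a and the constants c1, c2 are assumptions of this sketch, and the check is done by substituting the solution back into the equation:

```wolfram
(* Hypothetical coefficient matrix for the system x'(t) == a.x(t) *)
a = {{4, 1}, {2, 3}};

(* Matching eigenvalues and eigenvectors *)
{vals, vecs} = Eigensystem[a];

(* Eigenvectors as columns *)
p = Transpose[vecs];

(* Diagonal matrix of exponentials Exp[lambda_i t] *)
expD = DiagonalMatrix[Exp[vals t]];

(* General solution with arbitrary constants c1 and c2 *)
sol = p . expD . {c1, c2};

(* Residual of the ODE; it should simplify to the zero vector *)
Simplify[D[sol, t] - a . sol]
```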

Suppose a particle is moving in a planar force field and its position vector $x$ satisfies $x' = a \cdot x$ and $x(0) = x_0$, where $a$ and $x_0$ are as follows. Solve this initial value problem for $t \ge 0$:

First, compute the eigenvalues and corresponding eigenvectors of $a$:

The general solution of the system is $x(t) = c_1 v_1 e^{\lambda_1 t} + c_2 v_2 e^{\lambda_2 t}$. Use LinearSolve to determine the coefficients:

Construct the appropriate linear combination of the eigenvectors:

Verify the solution using DSolveValue:

Produce the general solution of the dynamical system $x_{k+1} = a \cdot x_k$ when $a$ is the following stochastic matrix:

Find the eigenvalues and eigenvectors, using Chop to discard small numerical errors:

The general solution is an arbitrary linear combination of terms of the form $\lambda_i^{k}\, v_i$:

Verify that $x_k$ satisfies the dynamical equation up to numerical rounding:

Find the Jacobian for the right-hand side of the equations:

Find the eigenvalues and eigenvectors of the Jacobian at the equilibrium point in the first octant:

A function that integrates backward from a small perturbation of pt in the direction dir:

Show the stable curve for the equilibrium point on the right:

Find the stable curve for the equilibrium point on the left:

Show the stable curves along with a solution of the Lorenz equations:

Physics (4)

In quantum mechanics, states are represented by complex unit vectors and physical quantities by Hermitian linear operators. The eigenvalues represent possible observations and the squared modulus of the components with respect to eigenvectors the probabilities of those observations. For the spin operator ![]() and state ![]() given, find the possible observations and their probabilities:

Find the eigenvectors and normalize them in order to compute proper projections:

Computing the eigenvalues, the possible observations are ![]() :

The relative probabilities are ![]() for ![]() and ![]() for ![]() :

In quantum mechanics, the energy operator is called the Hamiltonian $H$, and a state with energy $E$ evolves according to the Schrödinger equation $i\hbar\,\frac{d\psi}{dt} = E\,\psi$. Given the Hamiltonian for a spin-1 particle in constant magnetic field in the ![]() direction, find the state at time $t$ of a particle that is initially in the state ![]() representing ![]() :

Find and normalize the eigenvectors:

Computing the eigenvalues, the energy levels are ![]() and ![]() :

The state at time $t$ is the sum of each eigenstate evolving according to the Schrödinger equation:

The moment of inertia is a real symmetric matrix that describes the resistance of a rigid body to rotating in different directions. The eigenvalues of this matrix are called the principal moments of inertia, and the corresponding eigenvectors (which are necessarily orthogonal) the principal axes. Find the principal moments of inertia and principal axes for the following tetrahedron:

First compute the moment of inertia:

The principal axes are the eigenvectors of ![]() :

The principal moments are the eigenvalues of ![]() :

Verify that the axes are orthogonal:

The center of mass of the tetrahedron is at the origin:

Visualize the tetrahedron and its principal axes:

A generalized eigensystem can be used to find normal modes of coupled oscillations that decouple the terms. Consider the system shown in the diagram:

By Hooke's law it obeys ![]() , ![]() . Substituting in the generic solution ![]() gives rise to the matrix equation ![]() , with the stiffness matrix ![]() and mass matrix ![]() as follows:

Find the eigenfrequencies and normal modes if ![]() , ![]() , ![]() and ![]() :

The shapes of the modes are derived from the generalized eigenvectors of the stiffness matrix with respect to the mass matrix:

Compute the generalized eigenvalues:

The eigenfrequencies $\omega$ are the square roots of the eigenvalues:

Construct the normal mode solutions as a generalized eigenvector times the corresponding exponential:

Verify that both satisfy the differential equation for the system:
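The generalized eigenproblem behind the normal modes can be sketched numerically. The stiffness and mass matrices below are hypothetical values picked for illustration, not those of the system in the diagram, and the names k, mass and omega are choices made for this sketch:

```wolfram
(* Assumed stiffness matrix (hypothetical values) *)
k = {{2., -1.}, {-1., 2.}};

(* Assumed mass matrix (hypothetical values) *)
mass = DiagonalMatrix[{1., 2.}];

(* Generalized eigenvalues and eigenvectors of k with respect to mass *)
{vals, vecs} = Eigensystem[{k, mass}];

(* Eigenfrequencies are the square roots of the generalized eigenvalues *)
omega = Sqrt[vals];

(* Each pair should satisfy k.v == lambda mass.v up to rounding *)
Table[Chop[k . vecs[[i]] - vals[[i]] mass . vecs[[i]]], {i, 2}]
```

The residuals in the last line should be zero vectors after Chop removes rounding noise.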

Properties & Relations (15)

The eigenvectors returned for a numerical matrix are unit vectors:

The eigenvectors returned for exact and symbolic matrices are typically not unit vectors:

Eigenvectors[m] is effectively the second element of the pair returned by Eigensystem:

If both eigenvectors and eigenvalues are needed, it is generally more efficient to just call Eigensystem:
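A short sketch of the relation, using a hypothetical exact matrix:

```wolfram
(* Hypothetical exact matrix *)
m = {{4, 1}, {2, 3}};

(* Eigenvectors alone compared with the second part of Eigensystem *)
Eigenvectors[m] == Last[Eigensystem[m]]

(* When both are needed, a single call returns them as a matched pair *)
{vals, vecs} = Eigensystem[m];
```

For this exact input the comparison is expected to return True. The single Eigensystem call also guarantees that vals[[i]] belongs to vecs[[i]].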

Eigenvectors solve $(m - \lambda\, I) \cdot v = 0$, with $\lambda$ a root of the characteristic polynomial and $I$ the identity matrix:

Find the roots, using CharacteristicPolynomial:

To verify the equality, join solutions to the homogeneous equation found with NullSpace:

Generalized eigenvectors either solve $(m - \lambda\, a) \cdot v = 0$, with $\lambda$ a root of the generalized characteristic polynomial, or obey ![]() for some scalar ![]() :

Using CharacteristicPolynomial, there is only a single root:

A matrix m has a complete set of eigenvectors iff DiagonalizableMatrixQ[m] is True:

The following matrix is missing an eigenvector, symbolized by a zero vector:

For an invertible matrix $m$, $m$ and $m^{-1}$ have the same eigenvectors:

Because eigenvalues are sorted by absolute value, this gives the same vectors but in the opposite order:

For an analytic function $f$, the eigenvectors of $m$ are also eigenvectors of $f(m)$:

For example, ![]() has the same eigenvectors:

The eigenvectors of a real symmetric matrix are orthogonal:

Confirm the eigenvectors are orthogonal to each other:
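The orthogonality check can be sketched with a Gram matrix. The symmetric matrix below is hypothetical and has three distinct eigenvalues:

```wolfram
(* Hypothetical real symmetric matrix with three distinct eigenvalues *)
s = {{2, 1, 0}, {1, 2, 1}, {0, 1, 2}};

(* Exact eigenvectors, returned as rows *)
vecs = Eigenvectors[s];

(* Gram matrix of pairwise dot products; off-diagonal entries should be 0 *)
Simplify[vecs . Transpose[vecs]]
```

The diagonal entries are the squared lengths of the vectors, which need not be 1 because exact eigenvectors are not normalized.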

The eigenvectors of a real antisymmetric matrix are orthogonal:

Confirm the eigenvectors are orthogonal to each other:

The eigenvectors of a unitary matrix are orthogonal:

Confirm the eigenvectors are orthogonal to each other:

The eigenvectors of any normal matrix are orthogonal:

Confirm the eigenvectors are orthogonal:

SingularValueDecomposition[m] is built from the eigenvectors of $m \cdot m^{\dagger}$ and $m^{\dagger} \cdot m$:

The columns of $u$ are eigenvectors of $m \cdot m^{\dagger}$:

The columns of $v$ are eigenvectors of $m^{\dagger} \cdot m$:

Consider a matrix $m$ with a complete set of eigenvectors:

JordanDecomposition[m] returns matrices $\{s, j\}$ built from eigenvalues and eigenvectors:

The $s$ matrix has the eigenvectors as its columns, possibly in a different order from Eigenvectors:

SchurDecomposition[n,RealBlockDiagonalForm->False] for a numerical normal matrix $n$:

The matrix q is built from eigenvectors, possibly in a different order from Eigenvectors:

To verify that q has eigenvectors as columns, set the first entry of each vector to 1. to eliminate phase differences between q and v:

If matrices share a dimension-$d$ null space, Eigenvectors[{m1,m2}] returns $d$ zero vectors:

The generalized eigenvectors of ![]() with respect to itself are padded with two zero vectors:

The matrix ![]() has a one-dimensional null space:

It lies in the null space of ![]() :

Thus, the generalized eigenvectors of ![]() with respect to ![]() are padded with one zero vector:

Possible Issues (5)

Eigenvectors and Eigenvalues are not absolutely guaranteed to give results in corresponding order:

The sixth and seventh eigenvalues are essentially equal and opposite:

In this particular case, the seventh eigenvector does not correspond to the seventh eigenvalue:

Instead it corresponds to the sixth eigenvalue:

Use Eigensystem[mat] to ensure corresponding results always match:

The general symbolic case quickly gets very complicated:

The expression sizes increase faster than exponentially:

Not all matrices have a complete set of eigenvectors:

Use JordanDecomposition for exact computation:

Use SchurDecomposition for numeric computation:

Construct a 10,000×10,000 sparse matrix:

The eigenvector matrix is a dense matrix and too large to represent:

Computing the few eigenvectors corresponding to the largest eigenvalues is much easier:

When eigenvalues are closely grouped, the iterative method for sparse matrices may not converge:

The iteration has not converged well after 1000 iterations:

You can give the algorithm a shift near an expected eigenvalue to speed up convergence:

History

Introduced in 1988 (1.0) | Updated in 1996 (3.0) ▪ 2003 (5.0) ▪ 2014 (10.0) ▪ 2015 (10.3) ▪ 2017 (11.1) ▪ 2024 (14.0)

Text

Wolfram Research (1988), Eigenvectors, Wolfram Language function, https://reference.wolfram.com/language/ref/Eigenvectors.html (updated 2024).
